Spectral correlation is perhaps the most widely used characterization of the cyclostationarity property. The main reason is that the computational efficiency of the FFT can be harnessed to characterize the cyclostationarity of a given signal or data set in an efficient manner. And not just efficient, but with a reasonable total computational cost, so that one doesn’t have to wait too long for the result.

Just as the normal power spectrum is actually the power spectral density, or more accurately, the spectral density of time-averaged power (or simply the variance when the mean is zero), the spectral correlation function is the spectral density of time-averaged correlation (covariance). What does this mean? Consider the following schematic showing two narrowband spectral components of an arbitrary signal:

Let’s define narrowband spectral component to mean the output of a bandpass filter applied to a signal, where the bandwidth of the filter is much smaller than the bandwidth of the signal.

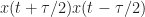

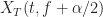

The sequence of shaded rectangles on the left are meant to imply a time series corresponding to the output of a bandpass filter centered at with bandwidth

Similarly, the sequence of shaded rectangles on the right imply a time series corresponding to the output of a bandpass filter centered at

with bandwidth

Let’s call the first time series and the second one

Since these time series, or signals, are bandpass in general, if we attempt to measure their correlation we will get a small value if

However, if we downconvert each of them to baseband (zero frequency), we will obtain lowpass signals, and there is the possibility that these new signals are correlated to some degree.

As a limiting case, suppose each of the signals were noiseless sine waves. By the construction of the figure, their frequencies must be different if

and so their correlation will be zero. However, if each sine wave is perfectly downconverted to baseband, the resulting signals are simply complex-valued constants, and the correlation between the two constant time series is high.

In general, the narrowband time series and

are not simple sine waves, but are sample paths of complicated random processes. Nevertheless, the correlation between separated spectral components–spectral correlation–is still a highly useful characterization of the signal for a large class of interesting signals.

The spectral components (the individual downconverted narrowband spectral components of the signal) are most often obtained through the use of the Fourier transform. As the transform of length slides along the signal

, it produces a number of downconverted spectral components with approximate bandwidth

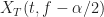

The two involved time series of interest are then renamed as

and

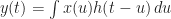

A measure of the spectral correlation is given by the limiting average of the cyclic periodogram, which is defined by

as the amount of processed data increases without bound, and then and only then the spectral resolution () is allowed to decrease to zero,

The limit spectral correlation function we just wrote down is a time-smoothed (time-averaged) cyclic periodogram. But the limit function can also be obtained by frequency smoothing the cyclic periodogram,

where is a unit-area pulse-like smoothing kernel (such as a rectangle). In (3), the symbol

denotes convolution.

The Significance of the Frequency A

The spectral correlation function (SCF) is typically zero for almost all real numbers

That is, for almost all signal types, most pairs of narrowband frequency components are uncorrelated. Those few

for which the SCF is not identically zero are called cycle frequencies (CFs). The set of SCF CFs is exactly the same as the set of cycle frequencies for the cyclic autocorrelation function (CAF)! That is, the separation between correlated narrowband signal components of

is the same as a frequency of a sine wave that can be generated by a quadratic nonlinearity (for example, a squarer or a delay-and-multiply device) operating on the original time-series data

The Cyclic Wiener Relationship

It can be shown that the Fourier transform of the CAF is equal to the SCF (The Literature [R1], My Papers [5,6]):

which is called the cyclic Wiener relationship. The Wiener relationship (sometimes called the Wiener-Khintchine theorem) is a name given to the more familiar Fourier transform relation between the conventional power spectral density and the autocorrelation

where is the conventional power spectrum and

is the conventional autocorrelation function.

It follows that the cyclic autocorrelation function is the inverse Fourier transform of the spectral correlation function,

and the conventional autocorrelation is the inverse transform of the power spectral density

The mean-square (power) of the time series (or variance if the time series has a mean value of zero) is simply the autocorrelation evaluated at

. This implies that the power of the time series is the integral of the power spectral density

Conjugate Spectral Correlation

This post has defined the non-conjugate spectral correlation function, which is the correlation between and

. (The correlation between random variables

and

is defined as

that is, the standard correlation includes a conjugation of one of the factors.)

The conjugate SCF is defined as the Fourier transform of the conjugate cyclic autocorrelation function,

From this definition, it can be shown that the conjugate SCF is the density of time-averaged correlation between and

where is the conjugate cyclic periodogram

The detailed explanation for why we need two kinds of spectral correlation functions (and, correspondingly, two kinds of cyclic autocorrelation functions) can be found in the post on conjugation configurations.

Illustrations

The SCF in Figure 2 is estimated (more on that estimation in another post) from a simulated BPSK signal with a bit rate of kHz and carrier frequency of

kHz. A small amount of noise is added to the signal prior to SCF estimation. The non-conjugate SCF shows the power spectrum for

and the bit-rate SCF for

kHz. The conjugate SCF plot shows the prominent feature for the doubled-carrier cycle frequency

kHz, and features offset from the doubled-carrier feature by

kHz. More on the spectral correlation of the BPSK signal can be found here and here. The spectral correlation surfaces for a variety of communication signals can be found in this gallery post.

A closely related function called the spectral coherence function is useful for blindly detecting cycle frequencies exhibited by arbitrary data sets.

Now consider a similar signal: QPSK with rectangular pulses. Let’s switch to normalized frequencies (physical frequencies are divided by the sampling rate) here for convenience. The signal has a symbol rate of , a carrier frequency of

unit power, and a small amount of additive white Gaussian noise. A power spectrum estimate is shown in Figure 3.

Consider also four distinct narrowband (NB) components of this QPSK signal as shown in the figure. The center frequencies are

and

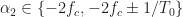

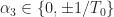

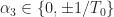

We know that this signal has non-conjugate cycle frequencies that are equal to harmonics of the symbol rate, or

for

. This means that the NB components with separations

are correlated. So if we extract such NB components “by hand” and calculate their correlation coefficients as a function of relative delay, we should see large results for the pairs

and

and small results for all other pairs drawn from the four frequencies. The correlation coefficient for two random variables

and

is

where is the expectation operator,

is the mean value of

,

is the mean value of

, and

and

are the standard deviations of

and

. Typically

. In the measurement below, we use temporal averaging in place of the expectation.

So let’s do that. We apply a simple Fourier-based ideal filter (ideal meaning rectangular passband) with center frequency frequency shift to complex baseband (zero center frequency), and decimate. The results are our narrowband signal components. Are they correlated when they should be and uncorrelated when they should be?

Here are the correlation-coefficient results:

Here the signals arise from the bandpass-filter center frequencies

So the spectral correlation concept is verified using this example: the only large correlation coefficients are those corresponding to a spectral-component center-frequency difference that is equal to a known cycle frequency for the QPSK signal (

). One can also simply plot the decimated shifted narrowband components and assess correlation visually:

Some readers question the need for the downconversion step before measuring correlation, so I’ve also performed our QPSK-based four-spectral-components measurement without doing the downconversion. That is, I just correlated the outputs of the four narrowband filters. The correlation coefficients and the outputs of the filters themselves (analogs of Figures 4 and 5) are shown in Figures 6 and 7. See also the discussion in the Comments section below.

Estimators

I’ve written several posts on estimators for the spectral correlation function; they are listed below. I think of them as falling into two categories: exhaustive and focused. For exhaustive spectral correlation estimators, the goal is to estimate the function over its entire (non-redundant) domain of definition as efficiently as possible. For focused estimators, the goal is to estimate the spectral correlation function for one or a small number of cycle frequencies with high accuracy and selectable frequency resolution.

Exhaustive Estimators

Strip Spectral Correlation Analyzer (SSCA)

Focused Estimators

The Frequency-Smoothing Method (FSM)

The Time-Smoothing Method (TSM)

Support the CSP Blog and Keep it Ad-Free

Please consider donating to the CSP Blog to keep it ad-free and to support the addition of major new features. The small box below is used to specify the number of $5 donations.

$5.00

I would like to know how I choose the parameters (time delay & cyclic frequency) to plot CAF in Matlab ?

Do you mean you want to plot the spectral correlation function? Or the cyclic autocorrelation?

I’m a bit confused because you mention the CAF but the comment is in response to the SCF post.

For either function, the cycle frequencies are, in the case of the post, the known cycle frequencies for the BPSK signal with bit rate of 333.3 kHz and carrier offset frequency of 100 kHz. This particular signal has a bandlimited pulse shape (not rectangular in the time domain, as we usually use on this site), so the non-conjugate cycle frequencies are +333.3, 0, -333.3 kHz. The conjugate cycle frequencies are 2×100 = 200 kHz, 200 – 333.3 kHz, and 200 + 333.3 kHz.

In your estimator, you need to use normalized frequencies at some point. In my case, the sampling rate was 1 MHz, so that the normalized bit rate is 1/3, and the normalized carrier offset is 0.1. That leads to normalized non-conjugate cycle frequencies of -1/3, 0, 1/3 and normalized conjugate cycle frequencies of 0.2, 0.2-1/3, and 0.2+1/3.

For the CAF, you can first create estimates of the SCF for each cycle frequency, then inverse Fourier transform them. The SCF estimates will correspond to all spectra frequencies between negative half the sampling rate and positive half the sampling rate, and transforming will then provide you with a CAF estimate over many distinct values of the lag variable. Using that method, you don’t have to choose any particular lags, you get them all.

Hi, Dr Spooner, I am confused about this: why is conjugate cycle frequencies: 2×100 = 200 kHz, rather than 100kHz, as the non-conjugate frequencies.

Because when you look at the periodic components of (“conjugate”) rather than of

(“conjugate”) rather than of  (“non-conjugate”) you get a factor that is sinusoidal with frequency

(“non-conjugate”) you get a factor that is sinusoidal with frequency  . You might want to look at these posts, which discuss why the cycle frequencies depend on the order of the moment/cumulant (

. You might want to look at these posts, which discuss why the cycle frequencies depend on the order of the moment/cumulant ( ), the number of conjugated factors (

), the number of conjugated factors ( ), and on the properties of the symbol random variable (“constellation”).

), and on the properties of the symbol random variable (“constellation”).

Conjugation Configurations

Cyclostationarity of Digital QAM and PSK

Why the CFs(alpha) for conjugate not: fs, fs+2fc, fs-2fc, as shown in ”Conjugation Configurations”, but 2fc, 2fc-fs, 2fc+fs as you show above?

There is no contradiction between the Conjugation Configuration post and this Spectral Correlation Post. If you use no conjugations, you’ll get and if you use two conjugations you’ll get

and if you use two conjugations you’ll get  .

.

I would like to know the formula of the threshold or the average to make the decision about spectrum sensing ? (likely as energy detector algorithm for spectrum sensing )

I don’t have a formula for the threshold for detection based on spectral correlation. I typically choose a threshold using empirical methods, which can take into account all the real-world deviations from a typical simple AWGN model. However, if you use the spectral coherence function, there is a way to compute a threshold that is based on some older work on cross spectral density measurements. I have a post in the works on how to compute and apply that thresholding formula. For now, look at The Literature [G. Carter, R64].

Hey Chad, I just want to say that this post really helped me understand what the spectral correlation function is conceptually (the correlation between different FFT frequency bins as they change over time). That first diagram was especially helpful. Thanks.

You’re welcome! Thank you for taking the time to write. Comments like yours keep me going.

Hi Chad. I think equation 8 lose a conjugate symbol. And can I regard X_T as complex envelope?

Eq (8) is correct as is. Notice that this is the conjugate cyclic periodogram, not the normal one (which is Eq (1)). You might want to read the post on conjugation configurations, which also explains why we need to consider a variety of numbers of conjugated terms when we study higher-order statistics for complex-valued signals. Yes, is the complex envelope.

is the complex envelope.

I see. Thank you.

Hi Dr Spooner,

Second bracket, right of eq (8) has (α/2-f) which is different from (f- α/2) as in eq (1). Please is this reversal correct? If it’s correct, is it because is the conjugate cyclic periodogram?

Kind regards,

Gabriel.

Yes, the reversal is correct. And you are right, it is because that quantity corresponds to the conjugate cyclic periodogram. Probably the easiest way to see this is to look at my post on the cyclic polyspectrum, and take the general th-order case to the specific case of

th-order case to the specific case of  and no conjugations.

and no conjugations.

I haven’t forgotten about your previous comment.

Thanks so much for your response. It’s very helpful.

What dose U mean in equation 2?

It is a variable with units of time, like , and is equal to the time interval over which the time-varying cyclic periodogram is averaged.

, and is equal to the time interval over which the time-varying cyclic periodogram is averaged.

Dear Chad, Thank you very much for your explanation. I followed several resources to understand the concept of spectral correlation, but those weren’t so helpful. Your post is the ideal.

Hey Chad,

Thanks for this valuable blog.

I have some difficulty to understand the physical meaning of the cyclic spectrum.

Let’s pick a specific point (f1,alpha1,a1) in the 3D spectral correlation figure, where f1 is the spectral frequency value, alpha1 is the cyclic frequency value, and a1 is the magnitude value. And Let’s say f1=10KHz and alpha1=100KHz. What these values are representing related to the original signal?

So represents the “idealized” correlation between the time-varying fluctuations in a very narrow band centered at

represents the “idealized” correlation between the time-varying fluctuations in a very narrow band centered at  kHz and the time-varying fluctuations in a second very narrowband centered at

kHz and the time-varying fluctuations in a second very narrowband centered at  kHz.

kHz.

Does that help?

For a specific alpha, the correlation has to be between 2 narrowband frequency components or it can be between 2 shifted versions of the whole spectrum?

For a specific and

and  , the correlation is between two narrowband components. But the spectral correlation function is a (typically continuous) function of

, the correlation is between two narrowband components. But the spectral correlation function is a (typically continuous) function of  and discrete function of

and discrete function of  , so if you compute the entire function, you get something that you might call a set of correlations between all possible shifts of the entire spectrum.

, so if you compute the entire function, you get something that you might call a set of correlations between all possible shifts of the entire spectrum.

If you fix , and let

, and let  vary over

vary over ![[-f_s/2, f_s/2]](https://s0.wp.com/latex.php?latex=%5B-f_s%2F2%2C+f_s%2F2%5D&bg=ffffff&fg=1a1a1a&s=0&c=20201002) , then you’ll get a set of all the possible correlations between narrowband spectral components whose separation is

, then you’ll get a set of all the possible correlations between narrowband spectral components whose separation is  .

.

If you fix , and let

, and let  vary over

vary over ![[-f_s, f_s]](https://s0.wp.com/latex.php?latex=%5B-f_s%2C+f_s%5D&bg=ffffff&fg=1a1a1a&s=0&c=20201002) , then you’ll get a set of all the possible correlations between narrowband spectral components whose center frequencies have average value

, then you’ll get a set of all the possible correlations between narrowband spectral components whose center frequencies have average value  .

.

OK that make sense, thanks very much

Hi, Chad,

Thanks for your post. I have a question regarding to “first time-series Y_1” and “second time-series Y_2”. Are they both the sequence of shaded rectangles on the right? Or Y_1 should be the left ones with center frequency f-A/2? Thanks!

Aaron:

Thanks for finding a typo in the Spectral Correlation post! Yes, is supposed be the sequence of rectangles centered at

is supposed be the sequence of rectangles centered at  , but I had written

, but I had written  and

and  . So I’ve changed the first

. So I’ve changed the first  to a

to a  .

.

Hi Chad,

Thanks for this wonderful blog. I am new to this cyclostationary signal analysis. I just wanted to know in case of QPSK signal whether taking a conjugate SCF will help us in estimating an unknown carrier frequency/Offset. Or have you covered parameter estimation using SOC in anywhere in detail?

Thanks for writing Deepu.

No, the conjugate spectral correlation function cannot be used to obtain a high-accuracy estimate of the carrier offset for QPSK (it can for BPSK). That is because QPSK signals, and other symmetric-constellation digital QAM/PSK signals (but not BPSK), do not possess conjugate cyclostationarity. You have to use higher-order cyclostationarity to create a high-accuracy estimator of the carrier offset.

For parameter estimation, I’ve only covered cycle-frequency estimation and time-difference-of-arrival estimation so far.

Thanks for the reply Dr Chad Spooner. But from your post regarding the “Conjugation Configuration” i have read that for second order quadrature signals possess conjugate cyclostationarity. I am quoting the content of that post.

“”This means that in the end, for second-order, we always need to consider the “no conjugations” case z(t+\tau_1)z(t+\tau_2) as well as the “one conjugation” case z(t+\tau_1)z^*(t+\tau_2), which provide us with the conjugate and non-conjugate cyclic autocorrelation and spectral correlation functions, respectively.”

Am I confused with something else here please help?

We always need to consider both the non-conjugate and conjugate cyclic autocorrelation and spectral correlation functions for any signal. However, the conjugate cyclic autocorrelation (and therefore the conjugate spectral correlation function) can be zero for a particular signal type. So in the cases of BPSK, MSK, OOK, and GMSK, the conjugate spectral correlation function is not zero for all possible cycle frequencies, but for QPSK, 16QAM, 8PSK, etc., it is zero. This situation is one of the motivating factors for studying and using higher-order cyclostationarity.

Does that help?

OK I got it. I have also verified the SCF equations for QAM modulation, which also shows that function is zero for non zero alpha. Thanks

Well, the conjugate SCF for QAM modulation (including QPSK) is zero for all cycle frequencies alpha, not just non-zero alpha.

When I obtain SCF for BPSK and QPSK(or any QAM), I get 4 and 2 peaks in the plot respectively? Can you explain why it is so?

Hey Ramesh. Can you elaborate on what you have done? For example, are you using real-valued or complex-valued BPSK, QPSK, QAM? Which “plot” are you referring to?

I believe I will be able to explain once I get a clear question.

In the meantime, have you read my posts on QAM/PSK? They might help answer your questions. Here they are:

QAM and PSK

SRRC Pulses

Gallery

And in the estimation of cyclic cumulants in other post. We get peaks at different values of \alpha. Can you explain why?

Hi, Dr.Spooner

For a complex valued QPSK signal, there is no peaks at its alpha domain (alpha = +/-2fc) because of real component and image component cancel each out. So, if we just take real part of received signal, is possible to use SCF to blindly estimate its carrier frequency ?

Thanks,

Best,

Andy

I don’t think so, as the ability to generate a carrier-related cycle frequency is dependent on the signal’s constellation, and that doesn’t change if you take the real part of the complex-valued signal representation. Let’s try a mathematical argument.

The baseband QAM/PSK signals are given by

which neglects the symbol-clock phase and the carrier phase

and the carrier phase  that I often include in the model. Not important here.

that I often include in the model. Not important here.

The complex-valued transmitted signal representation is

again neglecting carrier phase, and noting that . The actual real-valued transmitted-signal model is just the real part of

. The actual real-valued transmitted-signal model is just the real part of  , which is what you’re thinking of:

, which is what you’re thinking of:

or

Now you are suggesting that if we square , we might get at a cycle frequency that is related to the carrier (like the doubled-carrier cycle frequency of BPSK). So let’s take a look at

, we might get at a cycle frequency that is related to the carrier (like the doubled-carrier cycle frequency of BPSK). So let’s take a look at  .

.

or

The first-order cycle frequencies for can be found by applying the cyclic autocorrelation formula directly to the squared signal, resulting in terms like

can be found by applying the cyclic autocorrelation formula directly to the squared signal, resulting in terms like

So we see, then, that the cycle frequencies for are those for

are those for  ,

,  , and

, and  . This is just the analysis in the conjugation configuration post.

. This is just the analysis in the conjugation configuration post.

For BPSK, which has a constellation , the first-order cycle frequencies for the signal

, the first-order cycle frequencies for the signal  are:

are:

Term 1: (SRRC pulses)

(SRRC pulses)

Term 2:

Term 3:

And for QPSK,

Term 1:

Term 2:

Term 3:

This just means that if you take the FFT of , you’ll see the usual second-order cycle frequencies for the underlying signal type.

, you’ll see the usual second-order cycle frequencies for the underlying signal type.

If you apply a second-order analysis to , well, then you’re back to a fourth-order transformation of the original signal, which you are seeking to avoid.

, well, then you’re back to a fourth-order transformation of the original signal, which you are seeking to avoid.

Did I err?

Hi Dr Spooner,

Thank you for your posts. They are very useful.

I have a question on this topic.

Since the conjugate spectral correlation function is zero for QPSK, is it the same with offset QPSK (OQPSK) or staggered QPSK (SQPSK)?

Are there peaks at (+/- 2fc) and (2fc +/- 1/T) where 1/T is the symbol rate for OQPSK or SQPSK?

Kind regards,

Gabriel.

Good question. First, staggered QPSK (SQPSK) and offset QPSK (OQPSK) refer to the same modulation (which you probably already know, but other readers might not). It consists of two BPSK signals summed in phase quadrature (one baseband binary PAM multiplies a , the other multiplies a

, the other multiplies a  ). This is also true of QPSK. However, for OQPSK/SQPSK, the second binary PAM is delayed relative to the first by half a symbol width.

). This is also true of QPSK. However, for OQPSK/SQPSK, the second binary PAM is delayed relative to the first by half a symbol width.

The second thing to know here is that MSK is exactly a form of SQPSK/OQPSK. It is an OQPSK signal with pulses in the PAM signals that are half-sines. That is, they are shaped like half a period of a sine wave. So, MSK is exactly an OQPSK signal. This is useful because if we analyze generic OQPSK signals, we get the result for MSK (and GMSK) too. The tricky part of all this is that an MSK signal is a FSK signal, and it has an underlying bit rate (the rate of the PAM signal inside the ), whereas we usually talk about the symbol rate of an OQPSK signal. The bit rate of the MSK signal, when viewed as an FSK signal, is twice the symbol rate of the MSK signal, when viewed as an OQPSK signal. Or, the symbol rate is half the bit rate.

), whereas we usually talk about the symbol rate of an OQPSK signal. The bit rate of the MSK signal, when viewed as an FSK signal, is twice the symbol rate of the MSK signal, when viewed as an OQPSK signal. Or, the symbol rate is half the bit rate.

All of this is fairly well known. The staggering of the second PAM signal causes the second-order cycle-frequency pattern to deviate from that for BPSK or QPSK. Basically, every other feature is cancelled. So in the non-conjugate domain, we don’t see the symbol-rate feature, but do see the second harmonic of the symbol rate (if the pulse supports it). In the conjugate domain, we do not see the doubled carrier, but we do see the two features on either side of the doubled carrier.

So consider two signals. The first is an MSK signal with bit rate and carrier

and carrier  . The second is an OQPSK signal (SRRC pulses) with symbol rate

. The second is an OQPSK signal (SRRC pulses) with symbol rate  and carrier

and carrier  . So the symbol rate of the OQPSK signal is half the bit rate of the MSK signal, and therefore we should see similar cycle frequencies for the two signals:

. So the symbol rate of the OQPSK signal is half the bit rate of the MSK signal, and therefore we should see similar cycle frequencies for the two signals:

Hi Prof. Spooner,

Thank you so much for taking your time to give such detailed explanations and illustrations. This blog is highly commendable. It is difficult to get articles with such clarity. I came across a table in an article that presented cyclic features of some modulation types including OQPSK/SQPSK. It wasn’t easy to get clear explanations from many articles.

Yes , just as you have analyzed, the cyclic features of OQPSK/SQPSK were presented as similar to that of MSK in that table. Does that put OQPSK/SQPSK in a position of being analyzed by the second order cyclostationary structure? In other words, can the conjugate SCF be sufficiently used to analyze it with respect to the carrier frequencies? Something not possible with the normal QPSK. I hope that is right?

Once again, many thanks for your efforts in this blog.

King regards,

Gabriel.

Yes, absolutely. If you look at the graph from the previous comment I made, although the doubled-carrier feature is missing for MSK/GMSK/OQPSK, there are enough strong conjugate features that are related to the doubled carrier such that it is easy to get an estimate of the carrier provided you properly identify each feature. So, MSK/GMSK/SQPSK is easy to recognize and characterize using only second-order features.

If you really want a mind-bender, check out /4 DQPSK.

/4 DQPSK.

Great!! Many thanks for your quick response. Well appreciated.

Kind regards,

Gabriel.

Dear sir,

May I ask a question, could you upload the matlab code which generate the full size of normalized SCF ? I am a beginner level right now, it’s very hard for me to learn this just simply looking at those equations. I saw someone post a function called “autofam”. that function is really close to what I need (full size, and frequency are all normalized), but that is not normalized (the max SCF > 1) SCF. Thanks a lot in advance.

Sunson211:

Thanks for reading the CSP blog and for your comment. I don’t give out much code (some signal generation code and some machine-learning dataset-extraction code has been posted). The general rules I follow for providing help are laid out here.

Hi Chad, thanks for your reply. Sure, allow me to ask questions here if possible. But do you know how to attach some pictures here? It’s much easier if I can put some pictures. Thank you.

I’ve been working on it, but I don’t think it can be done at this time. You can take a look at the post that you want to comment on again, and see if there are new options for uploading an attachment. If not, you might do what some others have done and post your images to another site, such as imgur.com, and then paste a link to them in a comment to the CSP Blog.

And yes, you cannot create a post on the CSP Blog, you may only comment.

Btw, Chad. It seems that I only allow to give reply, but I cannot make a new post?

Sorry, I didn’t include the link of autofam.

https://github.com/sayguh/MastersProject/blob/master/matlab/sandbox/autofam.m Thank you.

Dear friends,

I have a general question about SCF. It is well known that SCF is very good for signal classification. So, my question is that, between original SCF, and normalized SCF, which one is better for classification purpose in practical ? Thank you.

I favor the coherence for detection of significant cycle frequencies and spectral correlation (and cyclic cumulants) for modulation recognition (signal-type classification).

got it! thank you!

Dear Chad Spooner,

I’ve just found your blog after decided to dive into the cyclo stationary process world. I think I will spend long hours reading through your posts. I would like to thank you very much for your contributions.

I would like to ask my first question if you don’t mind. What is the meaning of taking the FFT from the autocorrelation of an arbitrary signal vector (like MATLAB randn generated vector)? Is this similar to consider autocorrelation function with an alpha = 0?

Best Regards.

Thanks for visiting the CSP Blog Claudio!

Do you mean “taking the FFT of the autocorrelation”? The Fourier transform of the traditional autocorrelation function is the power spectrum. But maybe by “autocorrelation function” you mean the time-varying autocorrelation function. In that case, the Fourier transform of the time-varying autocorrelation where the transform is over the time variable , and not the lag variable

, and not the lag variable  , will reveal the different cyclic autocorrelation components.

, will reveal the different cyclic autocorrelation components.

Under the interpretation above, where “autocorrelation function” means “time-varying autocorrelation function”, if you take the FFT of the time-varying autocorrelation function and look at the FFT bin corresponding to zero frequency, you will have the normal stationary-signal autocorrelation value, which is also the cyclic autocorrelation function for .

.

I could help more if the question were made more precise. Do you think you can rephrase it?

Hi Chad Spooner,

Thanks for your feedback. You have made very clear statments and, from that, I noticed that I am missing critical fundamental concepts. I will think more, rephrase my questions, and get back soon.

Claudio:

Perhaps you should look at the time-domain posts:

The Cyclic Autocorrelation

Cyclic Cumulants

Estimating Temporal Moments and Cumulants

Hello Dr. Spooner,

I greatly enjoyed reading your blog and recently delved into the world of Cycostationary processing.

There is something that I do not yet understand regarding the SCF:

I implemented steps in MATLAB to calculate the CAF with input parameters such that I can specify the maximum Tau’s and Alpha’s to solve for. I also mirrored the CAF about Tau = 0 because of the symmetry of autocorrelation. I feel that I am ready to proceed to the next step and calculate the SCF. I thought this would be simple because it is widely known that the SCF is the Fourier transform of the CAF along Tau. However, when attempting to do this with a BPSK signal, I do not seem to get the expected results for the SCF. I see peaks at Alpha = +-2fc that are centered at f = +-2fc (instead of f = 0). I also get other peaks at Alpha = +-6fc and +-8fc.

The approach I took here is to FFT each column of the CAF matrix where each row represents a specific Tau and each column represents a specific Alpha. I then normalized the SCF by dividing by max(abs(SCF)).

I would greatly appreciate any clarifications that you can provide!

Thank You!

-John

Thanks for the question and your interest in CSP, John! I’m sure we can get your SCF estimator up and running.

Exactly how did you mirror the CAF? Did you obey the symmetry relation (4)?

I’m assuming you used a rectangular-pulse BPSK signal, as I recommend here. If so, do the plots of your cyclic autocorrelation function estimates match mine?

I obeyed the symmetry relation you mentioned by assuming that the non-conjugate CAF is symmetrical about Tau = 0. I did not worry much about the Alpha symmetry because I calculated all the Alphas of interest and did not mirror them. So to the best of my knowledge, I did obey the symmetry relation.

I tested my CAF algorithm with your BPSK signal and am able to replicate your output.

When taking the FFT of the CAF, should I take the FFT of the normalized CAF? Or should I not normalize it?

Any tips for obtaining the SCF would be extremely helpful!

Thank You!

Ah, but it isn’t. Look at Figure 1 and Eq. (5) in the symmetry post for example. Even the autocorrelation ( ) is not symmetric in

) is not symmetric in  . Its magnitude is symmetric about

. Its magnitude is symmetric about  , but the complex-valued function itself is not an even function of

, but the complex-valued function itself is not an even function of  . Figure 2 also shows the lack of symmetry of the complex function when

. Figure 2 also shows the lack of symmetry of the complex function when  . The magnitude is even.

. The magnitude is even.

What normalization do you refer to? If you multiply the CAF by a constant, and then take the Fourier transform, you’ll just get a scaled version of the spectral correlation function.

Hello Chad,

I see what I did wrong now and changed my approach of calculating the NC CAF based on symmetry:

I first calculate the CAF matrix from 0:Alpha_Max and from 0:Tau_Max. Then using the symmetry relations I find the other quadrants of the CAF. However, when I plot the abs, real, and imaginary parts for Alpha = Fbit I get something similar to Fig. 2 in your post on symmetry but not exact. My imaginary and real parts for Alpha = Fbit are more smooth and the magnitude for the imaginary part reaches a maximum of 0.1 (instead of ~0.3).

The code I am using for the CAF is as follows where xn is the input signal:

% Sampling Freq

fs = 1

% Define sampling period (s)

Ts = 1/fs;

% Define N, the number of points in x[n]

N = length(xn);

% n is the index for x[n]

n = 0:N-1;

% Define alpha

alpha = 0:dAlpha:alphaMax;

% Preallocation of matricies prior to for loop

RxTauAlpha = zeros(TauMax,length(alpha)); % Each column is different alpha

% For loop

% For loop iteration variable for tau

tauLoop = 0:TauMax-1;

for tau = tauLoop

for alpha_index = 1:length(alpha)

RxTauAlpha(tau+1,alpha_index) = (sum((xn(1:end-tau).*conj(xn(tau+1:end))).*exp(-1i*2*pi*alpha(alpha_index)*n(1:N-tau)*Ts))/(N-tau));

end

end

CAFq3 = -1.*real(RxTauAlpha)+1i.*imag(RxTauAlpha); %q3 is quadrant 3

Is there anything that I am not doing correctly to get the CAF from 0:Alpha_Max and 0:Tau_Max?

When I use the symmetry relations in this code to get the full CAF matrix and plot it, the result is as expected. When I try to get the SCF from the resulting matrix, the result is better than I had before but still not as expected (not getting diamond shaped region and peaks are at f = +-fc and alpha = +-2fc,+-3fc).

I presume Alpha_Max and Tau_Max are greater than zero. So your code estimates the asymmetric cyclic autocorrelation (see the last part of this reply) for the first quadrant of the plane. You can’t use the symmetry relations to fill in the remaining three quadrants of the plane. Recall Eq (4) from the symmetry post is

plane. You can’t use the symmetry relations to fill in the remaining three quadrants of the plane. Recall Eq (4) from the symmetry post is

or

which means you can find the values in the third quadrant from those in the first, but you can’t get at the values for the second and fourth quadrants. In your code, you negate the real part of the first-quadrant estimates and accept the imaginary part:

but the symmetry relation says that you should accept the real part and negate the imaginary part.

You are estimating the asymmetric CAF rather than the symmetric CAF, and so your real/imag plots won’t match mine in the symmetry post, and if you Fourier transform to obtain the SCF, you’ll not actually get the conventional SCF. See the discussion of symmetric and asymmetric CAFs and what you can do about it.

Hello Dr. Spooner,

Thank you so much for your guidance on my CAF/SCF estimator!

After following your advice, my estimators are producing the expected results.

I noticed that when plotting the SCF for one of my signals (BPSK, real-valued) I get some peaks that are at the edge (or corners) of the SCF beyond Alpha = 0.75Fs. Am I required to discard these peaks?

I do get the familiar diamond shaped region in the center of the plot so I figured that my algorithm works well.

Thank You!

-John Tyler

hello sir. i hope you will be fine.. sir i m working on modulation detection algorithm which can classify different modulation schemes such as qpsk. bpsk, fsk etc… so if you kindly help me out . i will be very grateful ..Thanks

I’ll certainly try if you ask a question. You might want to see my post on getting help.

Hi!

Help me please with some problem-I have a lof of time series of random signals in .txt file. I would like to calculate their Spectral Correlation Functions. But I have not software for to do it. What can I do in this situation?

Thanks for all.

The CSP Blog is primarily a “self-help” Blog. I recommend you write your own software using information from The Literature and my various “CSP Estimators” posts, which are tutorial versions of the algorithm descriptions you can find in the literature. See For the Beginner at CSP.

HI Dr Spooner! thank you for your post.

I have a question about the meaning of Y(t, f) at the beginning of this post.

If there is a random signal x(t) with power spectrum varying over time t, X(t, f)

I’ve understood Y(t, f) = X(t, f) H(f) (H(f) is bandpass filter with center freq. f, bandwidth B), am I right?

And I still can’t understand what exactly “suppose each of the Y_j(t, *) signals were noiseless sine waves.” means.

is this mean “random signal x(t) is sine wave”?

I’ll waiting for your response. thank you!

JunTaek:

Thank you for reading the CSP Blog and for your comment.

The function is the Fourier transform of a data block with width

is the Fourier transform of a data block with width  and center time

and center time  . The frequencies are denoted by

. The frequencies are denoted by  in this comment for clarity below. It is a sliding Fourier transform. This kind of transform is used as a building block for the conventional spectrogram, which forms

in this comment for clarity below. It is a sliding Fourier transform. This kind of transform is used as a building block for the conventional spectrogram, which forms  and displays it with the x-axis showing frequency

and displays it with the x-axis showing frequency  and the y-axis showing time

and the y-axis showing time  .

.

The idea of spectral correlation arises when we look at two elements of , one for frequency “bin”

, one for frequency “bin”  and the other for bin

and the other for bin  . Looking at the two time-series

. Looking at the two time-series  and

and  , we ask the question: Are these two functions of time correlated? If so, what is their correlation? What is their correlation coefficient?

, we ask the question: Are these two functions of time correlated? If so, what is their correlation? What is their correlation coefficient?

I brought up the thought experiment of imagining that those two time-series are just sine-waves themselves. What if each one is a sine wave with frequency exactly equal to the corresponding bin center? Then when we downconvert those two sine waves by an amount equal to the bin center, we get two functions that are constant over time–perfectly correlated, a correlation coefficient of one.

We can do that thought experiment without forcing itself to be the sum of two sine waves–there could be other things happening in the other bins of

itself to be the sum of two sine waves–there could be other things happening in the other bins of  .

.

Does that help?

Hi, Dr Spooner. I want to ask question in the sentence ”Consider also four distinct narrowband (NB) components of this QPSK signal as shown in the figure. ” –What does “four distinct narrowband” mean? Why should they appear in QPSK?

Thanks for the comment Charlie, and welcome to the CSP Blog!

I’ve updated the post a bit in an attempt to clarify the concept of “narrowband components.”

The term means the output of a lowpass or bandpass filter applied to arbitrary data, but where the bandwidth of the applied filter is much smaller than the bandwidth of the signal or data that is being filtered. So, all signals and all data inherently possess “narrowband spectral components.” If you could construct a sequence of ideal bandpass filters with non-overlapping support in frequency and whose union of support covered the signal’s bandwidth, then the collection of outputs from those filters would be a collection of narrowband spectral components, and together they would completely characterize the original input signal. In other words, the outputs of a typical filterbank or channelizer comprise “narrowband components.”

Does that help?

Thank you, sir. I mean, why are there four signals with different frequencies in the graph? QPSK should have only one frequency, with different phases that indicate different symbols, right? But there are four different peak signals in the garph, where do those come from?

Wait, you mean that the four signals are derived from the QPSK signal on the graph with narrow-band filters so that they have almost the same magnitude of the QPSK in respective points?

No. The passband gain of the bandpass filters is unity. The fact that the peaks of the power spectra for the four narrowband spectral components are very close to the corresponding power spectrum value for the QPSK signal is a consequence of the unity gain of the filters.

I suggest you look at my Signal Processing TookKit posts on linear time-invariant systems (filters) and look around the internet for information (tutorials) on linear systems like filterbanks.

Because I extracted four different narrowband components from the QPSK signal, each has bandwidth much less than the symbol rate of the QPSK signal, and each has a distinct center frequency. What I show in the graph is the power spectral density for each of those narrowband signals together with the power spectral density of the QPSK from whence they arise.

No. The only kind of signal that has “only one frequency” is a sine wave. All other signals have some non-zero bandwidth–the range of frequencies for which their power spectrum (for power signals) or Fourier transform magnitude (for energy signals) is non-zero. QPSK with square-root raised-cosine pulses has a bandwidth of , where

, where  is the roll-off parameter.

is the roll-off parameter.

I applied four distinct (complex) bandpass filters to the QPSK data. The outputs of those four filters are the narrowband spectral components I then analyze further in the post.

So, the thing is that, the output of the four signals on the graph is due to the filter on four different frequency points of the spectrum of QPSK, respectively?

The filter outputs for the narrowband QPSK signal components on the graph are due to the application of four distinct narrowband filters.

Hello, sir, I am curious about this: why two bands at frequencies f-A/2 and f+A/2 have small correlations, but if they are downconverted to zero frequencies, they would probably have larger correlation?

Because the effective sine-wave modulation of the lowpass time-series, implied by the lack of downconversion, results in the correlation of the envelope signal being obscured by the correlation between the two modulating sine waves. And the correlation between two sine waves with different frequencies is zero. You should try it for yourself–what we want to measure is the correlation between the envelopes of the extracted narrowband components, not the correlation between the narrowband components themselves.

Here are the correlation coefficients and time-domain plots for the same experiment I did above, but without the downconversion and the decimation:

Thanks a lot, Prof Spooner!

Could you please offer the matlab code of the above to demonstrate the correlation of the four signals?

No. The CSP Blog is a ‘self-help’ resource. If you are having trouble with extracting narrowband spectral components of a signal or some data record, please review linear systems and signal theory. Start here and follow the SPTK links.

Hi, Mr Spooner, could you please tell me : what do you mean by ”As the transform of length T slides along the signal x(t), it produces a number of downconverted spectral components with approximate bandwidth 1/T.” ? What does “slide” mean ?

I’m using slide here to mean a sequence of time shifts. If you think of convolution, where you’ve got , the function

, the function  slides along the

slides along the  axis as

axis as  is varied. Similarly, the moving-average filter has a rectangular impulse response function that is said to move, run, or slide along the x-axis defining the independent variable of the data to be averaged.

is varied. Similarly, the moving-average filter has a rectangular impulse response function that is said to move, run, or slide along the x-axis defining the independent variable of the data to be averaged.

In a 2D plot that shows the amplitude(the correlation) versus the frequency, where the alpha is fixed at 110Hz, two points mount– the frequency 50Hz and 60Hz– so the frequency of (-5Hz, 105Hz), and (5Hz, 115Hz) of narrowband spectral components are strongly correlated respectively, at a specific time, right?

I don’t know what you mean by ‘mount.’

Why is ‘frequency’ denoted by an ordered pair?

The narrowband components of a signal or data record are functions of time. They are correlated or uncorrelated only over time–correlation is an averaging operation.

I mean that in the 2D plot of correlation versus frequency, fixing alpha=110Hz, so the frequency=50Hz and frequency=60Hz has large peak, so the frequency 50+(110/2)Hz and frequency 50-(110/2)Hz have correlation, and frequency 60+(110/2)Hz and frequency 60-(110/2)Hz have correlation, right?

I mean that in the 2D plot of correlation versus frequency, fixing alpha=110Hz, and the frequency=50Hz and frequency=60Hz has large peak, so the frequency 50+(110/2)Hz and frequency 50-(110/2)Hz have correlation, and frequency 60+(110/2)Hz and frequency 60-(110/2)Hz have correlation, right?

I mean that in the 2D plot of Cycle spectrum amplitude versus frequency, fixing alpha=110Hz, and the frequency=50Hz and frequency=60Hz has large peak, so the frequency 50+(110/2)Hz and frequency 50-(110/2)Hz have correlation, and frequency 60+(110/2)Hz and frequency 60-(110/2)Hz have correlation, right?

I would say, no, the spectral components at are probably not correlated, but you’ve not supplied enough information to be sure.

are probably not correlated, but you’ve not supplied enough information to be sure.

Whether or not spectral components are correlated cannot be determined by looking at a power spectrum. In my example, I’m looking at a QPSK signal with symbol rate , so that spectral components that are separated by

, so that spectral components that are separated by  Hz are correlated. But you could apply a filter to white noise to form a stationary signal that has exactly the same PSD as that of the QPSK signal. No spectral components of that filtered noise signal will be correlated.

Hz are correlated. But you could apply a filter to white noise to form a stationary signal that has exactly the same PSD as that of the QPSK signal. No spectral components of that filtered noise signal will be correlated.

But if I extract a 2D plot(SCF amplitude-frequency),fixing alpha=110Hz, from a 3D plot(SCF-frequency-alpha), and there are 2 peaks at 50Hz, 60Hz, can I get some clues or information from it?–Sorry to bother you again i’m a first learner.

Ah, OK. Yes, if you have a high-quality estimate of the full SCF, or a theoretical plot (infinite-time average), and you fix at 110 Hz, then the peaks in the resulting 2D slice for that

at 110 Hz, then the peaks in the resulting 2D slice for that  do imply spectral correlation of specific narrowband spectral components. The peak at

do imply spectral correlation of specific narrowband spectral components. The peak at  implies the two narrowband spectral components with frequencies

implies the two narrowband spectral components with frequencies  are correlated. But beware false correlations–you might also want to check the spectral coherence surface when you don’t have a theoretical surface, but are working with finite-length data records. False peaks can happen because the power spectral density of the two involved spectral components are large, even when the associated spectral components are not actually correlated. For example, a sine wave in the data can be associated with false peaks in the spectral correlation surface. Sufficient averaging will reveal the falsity, but ‘sufficient’ might be much too big in practice.

are correlated. But beware false correlations–you might also want to check the spectral coherence surface when you don’t have a theoretical surface, but are working with finite-length data records. False peaks can happen because the power spectral density of the two involved spectral components are large, even when the associated spectral components are not actually correlated. For example, a sine wave in the data can be associated with false peaks in the spectral correlation surface. Sufficient averaging will reveal the falsity, but ‘sufficient’ might be much too big in practice.

Thanks a lot, Dc Spooner, for your patience and careful description. I get to know a lot after your teaching!!