In this post I present a very useful blind cycle-frequency estimator known in the literature as the strip spectral correlation analyzer (SSCA) (The Literature [R3-R5]). We’ve covered the basics of the frequency-smoothing method (FSM) and the time-smoothing method (TSM) of estimating the spectral correlation function (SCF) in previous posts. The TSM and FSM are efficient estimators of the SCF when it is desired to estimate it for one or a few cycle frequencies (CFs). The SSCA, on the other hand, is efficient when we want to estimate the SCF for all CFs.

See also an alternate method of efficient exhaustive SCF estimation: The FFT Accumulation Method.

The SSCA is a kind of time-smoothing method and a detailed examination of the method and its properties can be found in The Literature [R3-R5]. Here I’ll briefly describe the estimator mathematically, then try to explain why the rather odd-looking estimator form produces estimates of the spectral correlation function. Finally, I’ll provide a few processing examples. In future posts I’ll contrast the SSCA performance and computational cost with those for the FSM and TSM.

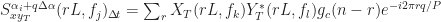

The block diagram for the SSCA looks like this:

Essentially, the input data is first channelized, and then each of the narrowband outputs of the channelizer is multiplied by the original input signal and Fourier transformed. The channelizer is usually implemented by a sliding FFT. So the method clearly harnesses the computational power and efficiency of the FFT, leading to its overall low cost compared to other exhaustive-CF spectral correlation estimators (such as the FSM and TSM when they are placed in a loop over cycle frequency).

Mathematically, a particular cross (between arbitrary signals and

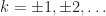

) spectral correlation function point estimate is given by

where the demodulates are defined as

Here we use the subscript to denote the total averaging time in seconds, so that

, where

is the sampling rate. And this notational choice connects well to other CSP Blog posts’ notations. Similarly, the subscript

denotes the length of time used in each of the channelizer’s FFTs, so that

. But we don’t really care about the absolute value of

, so the time-referencing subscripts aren’t so important going forward.

Let’s try to show that the SSCA point-estimate expression (1) can be manipulated into a form such that we can more easily see that the spectral correlation function is estimated. The first step is to substitute the expression for the demodulate into the SSCA point estimate and simplify,

Define . Then

Now, substitute , rename

to

, substitute

, and finally rename

to

:

Assume that . The inner sum is just an estimate of the cyclic autocorrelation, with data tapering (windowing) and with limits on the sum that depend on the lag

. This is the cyclic correlogram (The Literature [R5]). Thus, we have the following relation,

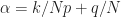

Here we can identify the pair from our usual parameterization of the spectral correlation function. First, we have

. The spectral frequency

is the argument of

, and is given by

.

Therefore, the point estimate is confirmed. We see that the time-smoothed SSCA estimate is approximately equal to a Fourier-transformed cyclic autocorrelation estimate. The requirement for reliability on this estimate is that the length of time used to estimate the cyclic correlogram, , is sufficiently large and much larger than the approximate span of the function, which is contained in the window

, which itself has width

. That is,

or

once again.

The demodulates are formed by short-duration () FFTs, which are often called the channelizer FFTs, and the final point-estimate outputs are formed by long (

) FFTs, which are often called the strip FFTs, because each one of those FFTs produces a set of point estimates that lie on strip parallel to the line

in the

plane.

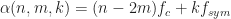

Numerical Example: Rectangular-Pulse BPSK

Let’s turn to our usual example: the textbook rectangular-pulse BPSK signal. This signal has normalized bit rate of and normalized carrier frequency of

. The non-conjugate cycle frequencies are harmonics of the bit rate, and so are equal to

, and the conjugate cycle frequencies are the non-conjugate cycle frequencies plus the doubled carrier

.

We process samples of the rectangular-pulse signal using a straightforward C-language implementation of the SSCA. The number of strips,

, is set to

. This SSCA processing results in

SCF point estimates for the non-conjugate SCF and another

for the conjugate SCF. However, symmetries allow us to look at the non-conjugate function for only the non-negative cycle frequencies. Nevertheless, there are a lot of point estimates in the example. Plotting all of them is a difficult exercise in visualization. Here I plot only the

pairs that correspond to the

largest values of spectral correlation magnitude:

And the 500 largest values of conjugate spectral correlation magnitude:

These two plots show that the algorithm is producing relatively large SCF values for the signal’s true cycle frequencies. There are also some relatively strong SCF magnitudes for cycle frequencies near zero (non-conjugate) and the doubled carrier (conjugate) that are not actual cycle frequencies of the textbook rectangular-pulse BPSK signal. These high values are due to the general and important phenomenon of cycle leakage, which we discuss and analyze in the post on resolutions in CSP. For now, think of cycle leakage as akin to spectral leakage in a normal spectrum analysis setting: the PSD estimate for frequencies near to a powerful sine wave signal will be inflated due to proximity to the sine wave and the imperfect frequency response of the estimator.

So, if the SSCA is applied to a new signal, whose cycle frequencies are not yet known, it is clear from the two graphs above that it could be used to blindly find the significant cycle frequencies, simply by thresholding the spectral correlation magnitude. However, there is a better way to sort through the large number of SSCA-produced spectral correlation point estimates, and that is by using the spectral coherence.

Computing and Using the Coherence in the SSCA

Recall from the post on the coherence that it is simply a normalized version of the spectral correlation, in exactly the same way that the correlation coefficient from statistics is a normalized version of the correlation. The non-conjugate coherence is given by

and the conjugate coherence is

The SSCA produces the values needed for the numerators of the coherences; all we need is the power spectrum values in the denominators. In principle, these can be obtained from the values of the power spectrum automatically produced during SSCA computation. However, it is often better to use a more finely sampled PSD estimate, such as can be obtained through use of the frequency-smoothing method or time-smoothing method of spectral correlation estimation, with the cycle frequency set to zero.

Once the coherence is computed in the SSCA implementation, it can be thresholded to yield the significant cycle frequencies. Moreover, a cycle-frequency binning operation can be applied to those cycle frequencies that pass threshold, limiting the output of the algorithm to only unique values of cycle frequency. For our rectangular-pulse BPSK signal, the top non-conjugate coherence values correspond to the following

pairs:

and for the conjugate coherence, we obtain

The false cycle frequencies do not pass through the thresholding process when the coherence is used!

After coherence-based thresholding and cycle-frequency binning, we obtain the following output for the non-conjugate cycle frequencies of our textbook rectangular-pulse BPSK signal:

Frequency Cycle Frequency Coherence SCF

and for the conjugate cycle frequencies:

Frequency Cycle Frequency Coherence SCF

Final Example: Captured CDMA-EVDO

There is an EVDO downlink signal at MHz where I live, and I have recorded several seconds of it for analysis purposes. A typical PSD for the captured signal is shown here:

After applying the SSCA to this EVDO data, we obtain the non-conjugate and conjugate cycle frequency sets shown in the following graphs:

We conclude that the signal has five strong non-conjugate cyclic features for cycle frequencies that are harmonics of MHz (not

MHz), and no significant conjugate cyclic features. The CDMA chip rate of

MHz is also present, but has smaller spectral correlation and spectral coherence than the feature at

MHz. This kind of analysis illustrates the power of the SSCA: Efficient (fast) blind estimation of all second-order cycle frequencies.

From what I understand the FAM and SCCA algorithm generates a tilted bifrequency plane, that being the value of alpha=0 lie across the primary diagonal of the matrix output, and there is only a single value for alpha = +/- Fs. This makes some sense, due to the fact that at least in the FAM I am conjugate multiplying each frequency bin column against all the other columns. Does that sound correct? Is there a proper technique for correcting this shift, so alpha=0 lies upon the x-axis? Would that cause points to lie upon Fs/(2*N) frequency spacing?

In a related question, when computing the spectral coherence (SOF), I can take the value of the SCF at alpha=0 which is essentially a PSD. I can take that and do circular shifts conjugate multiplies to get NxN matrix. In theory I can use that matrix to be the denominator in the SOF calculation, but since the FAM outputs in that diagonal fashion, how would I deal with that?

Also, is it a true statement that the SOF magnitude will always be 1 when alpha=0? Is the SCF or SOF magnitude always symmetric about the alpha=0 axis?

The difficulty here is that we must agree what “the matrix output” means to proceed. We can agree right now, however, that the SSCA proceeds strip-by-strip. Each strip FFT is the FFT of the product of the original complex-valued signal with one of the channelizer outputs. Each of these strip FFTs will contain a contribution to the PSD (alpha = 0). And each of the strip FFTs will contain N points, each of which corresponds to N different values of cycle frequency. So, I don’t agree with “there is only a single value for alpha = +/- Fs.”

What I do is create a table of SCF values as the SSCA proceeds with its strip FFTs. I end up with a data structure that has four elements: spectral frequency f, cycle frequency alpha, spectral correlation S, and coherence C. Then I can extract whatever “slices” I want from this structure.

Yes, you can use the SSCA-generated PSD values (those elements of the output that correspond to alpha = 0 in the non-conjugate SSCA output). However, there are only M of these values, one for each strip, and M << N, where N is the total number of samples processed (also the length of each strip FFT). So the spectral resolution of that effective PSD estimate is low (coarse) relative to the cycle resolution. This causes problems when the coherence quotient is formed, because the numerator and denominator have different resolutions. One way out of this is to form a stand-alone PSD estimate and use that for the denominator of the coherence. You can control the resolution of the stand-alone measurement.

I’m not sure about the FAM. I’ll need to look into it and do a FAM post!

Yes, the non-conjugate spectral coherence is always 1 for alpha=0, theoretically, provided that there is no point where the PSD is equal to zero. A good coherence estimator should always output very nearly 1 for this case. If it isn’t, you know you’ve got problems with your estimator, so definitely pay attention to this case.

You mean, is |S^(-a)(f)| = |S^(a)(f)|? Yes, for the non-conjugate SCF and coherence functions. But it is not true for the conjugate functions.

Thanks for the reply, I am new to this so still processing the concepts.

> And each of the strip FFTs will contain N points, each of which corresponds to N different values of cycle frequency. So, I don’t agree with “there is only a single value for alpha = +/- Fs.”

I am hung up on this concept. Once you do the strip FFTs, you get a resultant matrix which must then be mapped back into Sx in a particular way. Via this mapping, there appears to be only a single non-zero value at alpha=Fs, at F=0 and at alpha=-Fs, at f=0.

This mapping is mentioned in this reference http://www.dtic.mil/dtic/tr/fulltext/u2/a289815.pdf (24), and described in detail in section 3.3.3. It appears to essentially be a rotation mapping, where the region of support appears “diamond shaped” in the bifrequency plane. In the SSCA, the strips are diagonal slices. The highest alpha value in that region is alpha = Fs, which appears at f=0. Likewise at f=Fs/2 there is only a single value at alpha=0.

Does that make sense, or did I misinterpret things? I think each strip FFT does correspond to N different values of cycle frequency as you said, but each strip works at an offset of each other, so only one strip actually outputs alpha=-Fs, and the next strip outputs alpha=-Fs+1/Np/2, etc.

I think you are understanding just fine; I misinterpreted your earlier comment. Yes, that report by April has the diamond-shaped support region correct (this is independent of the SSCA of course), and you can see the diamond outline in the figures in my original post. I had forgotten about that report; thanks for reminding me aapocketz.

Hi there,

Thank you for the great informative posts related to this subject. I am a bit confused however regarding the frequency shifting of Sx by an amount of gamma=q*delta_alpha (see eq. 13 in aapocketz’ reference: http://www.dtic.mil/dtic/tr/fulltext/u2/a289815.pdf), such that the total frequency shifted expression can be evaluated using the FFT algorithm. Basically I just cannot mathematically figure out why multiplication of the TS cylic periodogram with exp[j*2*pi*gamma*m*Ts] relates to a shift in the value of alpha (one would expect an frequency shift such that Sx(n,f+gamma) ).

Thankfully your approach is the other way around, proofing that the SSCA provides us with an evaluation of the Fourier transform of the cyclic correlogram. What confuses me in your derivation though, it that in your third equation (where you substitute the complex demodulate X_T in spectral correlation function point estimate) you end up with an inner sum expression with iterative variable n. This variable is also used as the argument of the function S(n,f) and the window function g(n-r) (which is outside of the inner summation). Isn’t there something going wrong in the subsequent derivation due to this conflict in variable definition?

I hope you can help me,

Kind regards,

Vincent

I’m on vacation this week, but I will look into your question next week and provide a substantive reply. I may very well have made a math or notation error! Thanks for the careful read…

In the first paragraph of your comment, I believe you are talking about the complex-valued factor that we use in the TSM. I’ve previously tried to explain the origin of this factor in the TSM post. Can you take a look at that post and let me know if it doesn’t help? We can take it from there…

In the second paragraph, you point out an error in the SSCA development. Yes, there is a problem with the derivation, and some bad choices for notation! I will have to fix this. I don’t believe the basic conclusion will change, though. Thanks very much for pointing me to a flaw in the blog.

Hi Chad,

I’ve been following your blog recently and I really appreciate the detailed contents on cyclostationary. Currently I’m trying to recreate the simulation for SSCA, but I could not get converged coherently (not normalizing to 1).

I did try to use FSM method to estimate the PSD, but they seemingly on different scale altogether, although, with scaling that can be avoided. The result still looks that it didn’t normalizes properly.

With full FSM estimation, I can get it to normalizes properly.

Do you have any pointers on where I should look into?

Can you post a few more details about what is going wrong? It may be helpful to others to see what you are struggling with. You and I may need to take this to email to solve it, but for now, can you let us all know a little more?

We’ll get to the bottom of it eventually.

Thanks Chad for replying quickly.

Here is what I have right now.

Spectral correlation: https://db.tt/kZxefRuS

Spectral coherence: https://db.tt/PRBDKjVR

Those are generated using SSCA with FSM PSD.

I’ve also using the same waveform (your rect bpsk) to calculate using purely FSM method.

Spectral correlation: https://db.tt/VkPA90TU

Spectral coherence: https://db.tt/04Z0dxxb

Thanks, William

It’s hard to tell from the FSM plots, but it looks like the coherence (non-conj) for alpha = 0 is not unity across the band. This is the easiest thing to debug, perhaps, since we know that the coherence should be exactly one for every frequency. (You are just dividing the PSD by itself.) So if that is not coming out ‘1’, then you probably have an indexing error. Either that, or you are using two different PSD estimates, one for the numerator of the coherence, and one for the denominator, and they don’t match.

Here is the PSD comparison: https://db.tt/dsBEDztd

The blue represents FSM PSD estimate, the red dots represent SSCA alpha = 0 estimate

Something looks wrong with the FSM PSD in that plot. Can you send me the data files for both the red and blue dots?

Also, what were the parameters used in the FSM PSD estimate? (block length, width of smoothing window)

I forgot to close this topic thread and offer thanks to Chad for helping me out.

Looking forward to hearing more cs blog in the future.

Hi William S and Chad,

May I know how you managed to resolve your problem of the Coherence function not normalizing properly? I am having the same problem with magnitude scaling.

Currently, I am using matlab’s pwelch function to estimate the PSD, and then scaling the pwelch output to match the PSD estimate from SSCA at alpha=0.

I am sure this is incorrect, but this method of finding coherence using pwelch allows me to better detect spectral peaks much better than SSCA alone.

I have also tried estimating PSD using a frequency-smoothed periodogram, using a rectangular smoothing window of different lengths. However, I still obtain PSD estimates with a different scale from the SSCA.

This is the cyclic domain profile (maximum coherence across all alphas) of my test signal: http://imgur.com/gTUe8mY.

May I have some insights as to how I can solve this problem? Thank you!

Thanks for the comment Calvin. I also let William know you left it so he might reply as well.

I’m not sure if William had enough time to complete his coherence debugging to his satisfaction. Of course, my several implementations of the SSCA produce good coherences.

I think a possible strategy for you is to make sure that your various PSD estimates (SSCA, FSM, pwelch) have the correct shape and scaling independent of each other. That is, process a signal with an exactly known (analytically) PSD, such as white Gaussian noise with unit power (variance). Make sure each PSD is close to one across frequency. Then move on to other signals with known PSDs, such as PSK/QAM with IID symbols.

Also, the degree to which a spectral coherence estimate is accurate depends on the length of the processed data record and the spectral resolutions used in the SSCA and in the off-to-the-side PSD estimate, so there is some art to this task. Coherence can also go awry if the PSD contains discontinuities (e.g., step functions, impulses) or very rapidly fluctuating spectral regions.

What is the signal that you used to generate the estimates in your imgur link? I see that the maximum coherence for alpha=0 is about 2. Since the coherence for alpha=0 (non-conjugate) should be , you clearly have a factor-of-two difference between the psd-on-the-side estimate (denominator) and the SSCA output (numerator).

, you clearly have a factor-of-two difference between the psd-on-the-side estimate (denominator) and the SSCA output (numerator).

Thanks for the input Chad! You gave me a couple of ideas as to how to start debugging the coherence. One possible area I’ll look into is a power correction for pwelch and my PSD estimates. Once I get something working again I’ll update this thread.

As for my input signal, I’m using a srrc 16-QAM signal. Thanks for the help.

If you used a square-root raised-cosine 16-QAM signal with IID symbols to produce the cyclic domain profile shown in your imgur link above, we’ve got bigger problems than a PSD scaling factor. That signal has three non-conjugate cycle frequencies: ,

,  , and

, and  , and no conjugate cycle frequencies. Your plot shows at least

, and no conjugate cycle frequencies. Your plot shows at least  significant cycle frequencies. Seems closer to a rectangular-pulse QAM pattern.

significant cycle frequencies. Seems closer to a rectangular-pulse QAM pattern.

Hi Chad,

First, regarding my previous CDP plot:

You are right, I made a careless mistake in my signal generation. These are the corrected plots for a SRRC 16QAM signal. CDP: http://imgur.com/WetD7O9, Coherence: http://imgur.com/msOwflK.

Next, regarding the problem with normalising, it seems that the best coherence plots come from FSM PSD estimates that strongly mirrors the SSCA PSD (Sx at alpha=0). This means that a SSCA with coarser spectral resolution (i.e. smaller df) requires a corresponding FSM with a larger smoothing window. A FSM that is not sufficiently smoothed will produce spurious peaks in the coherence plot along the alpha=0 axis. These peaks are the cause of the larger than 1 magnitudes at alpha=0 on the CDP. A PSD that is not sufficiently smoothed will produce peaks.

Coherence: http://imgur.com/lwcp4vv

CDP: http://imgur.com/YrL65pM

Conversely, a PSD that is oversmoothed will cause dips below magnitude 1 along alpha=0 of the coherence plot. However, this does not affect the CDP plot.

Coherence: http://imgur.com/3KGP0u7

CDP: http://imgur.com/d1AUtuQ

My conclusion from all these is that the FSM PSD should mirror the SSCA PSD as closely as possible. This makes me question the need for a separate PSD estimate. Why should I be using a fine PSD estimate if ultimately I am smoothing it to match the SSCA PSD, which has a much lower spectral resolution. Would it not be easier just to interpolate the SSCA PSD to match spectral resolution (block length of alpha) and use that in my coherence calculations?

I would appreciate any help as I am quite puzzled by this seemingly contradictory situation. Thank you!

I view the interpolation of the SSCA-produced PSD values as a “separate PSD estimate.” That can definitely work. I would caution you to not make any solid conclusions, though, based on this one rather easy test case with a smooth underlying true PSD.

Chad,

Suggestion for improvement..I have been reading your blog to get a refresher and relearn, but there was an abrupt discontinuity in terms of Equation (1) above. I kind of had to look at other literature to see (if I am correct) your taking cross-correlation between two spectrograms. As a suggestion for improvement, is it possible to state the meaning of some of the variables of Equation (1), and how it came about? Why is there a positive q on S and then a negative q in the parenthesis? What is N? What is N prime?

Thanks

Masoud

I plead guilty to incompletely describing the SSCA! I did mention that a detailed examination of the method is in The Literature [R3]-[R5], and I hope it is clear that I did not author those papers nor did I have a hand in inventing/discovering the technique.

No, that is not correct. I wonder which literature you refer to (please leave citations if you reply to this comment). The SSCA produces a large set of point estimates for the spectral correlation function (SCF), which is described in detail in my SCF post. Spectral correlation refers (in the context of cyclostationary signal processing) not to the correlation of PSDs (“spectra”) or of spectrograms, but of two complex-valued time-series derived from downconverted narrowband components of a data-record or mathematical signal model of interest.

I think most of the variables are defined in the post, including the ones you mention such as and

and  . Which other variables are poorly defined? I added a few notes to the post to connect the time-in-seconds variables to the time-in-samples variables.

. Which other variables are poorly defined? I added a few notes to the post to connect the time-in-seconds variables to the time-in-samples variables.

I don’t quite know how to answer this. “That’s just the way it comes out” is not very satisfying, I know, but it is all I have right now.

Hi Chad, in your FAM post there is a stride parameter L in equation (1) . Can this stride parameter also be used in the SSCA? What affect does changing the size of the stride parameter have on your estimates? I assume that the resolution of

. Can this stride parameter also be used in the SSCA? What affect does changing the size of the stride parameter have on your estimates? I assume that the resolution of  is unaffected by L because the cycle-frequency resolution is 1/N.

is unaffected by L because the cycle-frequency resolution is 1/N.

I’m a little unsure what the frequency axis represents when is non-zero. For

is non-zero. For  the value at some frequency f represents the power contained in that frequency bin. But when

the value at some frequency f represents the power contained in that frequency bin. But when  is non-zero does the value at some frequency f correspond to the correlation between two spectral components that are located at

is non-zero does the value at some frequency f correspond to the correlation between two spectral components that are located at  and

and  ? I probably did a horrible job explaining my question but hopefully it made some sense.

? I probably did a horrible job explaining my question but hopefully it made some sense.

Hi Christopher!

I edited your comment to include the dollar signs around the latex code you wrote. You need to do “DS latex e^x DS” instead of “latex e^x” in WordPress, where DS stands for the dollar sign $. If I type it the way I’m trying to communicate here, it will be typeset, and I can’t figure out how to use \verbatim in WordPress.

Yes, there is a stride parameter in the SSCA as well as in the FAM. See The Literature [R3], [R4] for lots of details. Too much striding will result in cycle leakage and false cycle frequencies in the SCF. I almost never use it in the SSCA. The primary purpose is so that you can reduce the storage for the channelizer values. But memory is plentiful, and so I avoid reconstructing the full-rate channelizer outputs from the strided (subsampled) ones by just storing them all for a stride of unity. Your mileage may vary.

Yes! At least for the non-conjugate SCF. For the conjugate SCF, the interpretation is that the SCF value at some f is the correlation between frequency components at frequencies and

and  . I strongly recommend you look at the full spectral correlation post, especially the material toward the end.

. I strongly recommend you look at the full spectral correlation post, especially the material toward the end.

Thanks Chad! That clears up my questions. I did read your spectral correlation post which is very helpful. I was just struggling making the connection with the frequency axis but now I’m solid.

Hi Dr. Spooner,

I’m hoping this question will be of use to others. As you suggest, I am calculating the coherence by first taking an over-sampled PSD (in this case, N-points, to match the cycle frequency resolution), smoothing it using the frequency-smoothing method, and then finding the appropriate value for the relevant combination of f and alpha when doing the final mapping stage of the spectrum and strip FFTs to the bi-frequency plane. However, the coherence that I compute has some fairly large spikes that seem to coincide with the nulls in the PSD. I suspect that the PSD estimate and the SCF do not quite match up due to the different resolutions, and when the values of each are small, the division stage can cause some spuriously large coherence values just due to noise alone. I was wondering if you encounter this at all and how you mitigate it? Thanks!

Yes, I observe this effect as well. One thing to avoid is processing a noise-free signal using the coherence. The coherence quotient is not well-defined when one of the PSD values is zero. But the quotient can also be ill conditioned when the data contains features that are difficult or impossible to resolve, such as step-functions, impulses, and nulls. Spurious large coherence magnitudes can then result because the values in the denominator don’t properly reflect the variances of the two involved spectral components at (for the non-conjugate case).

(for the non-conjugate case).

To illustrate your question (and verify that I’ve understood it), here are some examples:

The noise I added to remove the large coherence values has one-tenth the power of the signal, so the main-lobe inband SNRs for the signals are still large (20 dB for the rect-pulse signal). The large coherence spikes for rectangular-pulse BPSK are due, as you suggest, to the nulls in the BPSK PSD occurring at ,

,  . The large coherence values for square-root raised-cosine BPSK are due to the frequency intervals outside of the signal band, which have theoretical value of zero, and in these measurements have a very small value.

. The large coherence values for square-root raised-cosine BPSK are due to the frequency intervals outside of the signal band, which have theoretical value of zero, and in these measurements have a very small value.

Evidently, dividing by zero is a problem :-). So a mitigation strategy is to ensure that the denominator of the coherence does not ever get small. Recall that the coherence is given by

So you can add noise to your data prior to processing (ouch!), or you can artifically “inflate” the PSD that you use in your calculations by adding some small constant to it, for example.

Hi Chad,

I’ve implemented the SSCA, primarily using Eric April’s report mentioned and linked to in other comments above. The results generally match your plots in the FSM or TSM posts for the BPSK signal, so I believe I have it mostly correctly implemented.

My uncertainty is related to normalization and the effects of the window functions and

and  . When

. When  and

and  are simple rectangular windows, I get correct results, but the SCF levels vary by several dB when using other windows. I did ensure that the condition in April equation (12) was satisfied –

are simple rectangular windows, I get correct results, but the SCF levels vary by several dB when using other windows. I did ensure that the condition in April equation (12) was satisfied –  . Oh, I did have to add a multiplication by

. Oh, I did have to add a multiplication by  , which I haven’t rigorously verified to be correct.

, which I haven’t rigorously verified to be correct.

Based on my interpretation of April’s recommendations, I’ve mostly been trying to use Kaiser windows with for

for  and

and  for

for  .

.

I can use the exact same (with normalized energy) with my own implementation of Welch’s method for estimating the PSD, and it has no visible effect on the resulting PSD levels. Does this indicate an error in my SSCA implementation, as I suspect? Or what effect should I expect the windows to have on the SCF / SSCA?

(with normalized energy) with my own implementation of Welch’s method for estimating the PSD, and it has no visible effect on the resulting PSD levels. Does this indicate an error in my SSCA implementation, as I suspect? Or what effect should I expect the windows to have on the SCF / SSCA?

Thanks for any clarification!

Good comment, Clint, and I appreciate it. I think I need to add another draft post to the (growing) list: Data Tapering in Spectral Correlation Estimators. For my benefit, yours, and that of all CSP Blog readers.

In the meantime … I looked up April’s report and the condition you mention is there, as it is in the Roberts/Brown/Loomis paper he cites (The Literature [R4]). But I don’t agree with it, and I don’t obey it in my SSCA implementations. In particular, the condition on is not obeyed by me, but the condition on

is not obeyed by me, but the condition on  is.

is.

The function is a genuine data-tapering window, and should be equal to one at its midpoint to preserve the level of the data it is shaping. The function

is a genuine data-tapering window, and should be equal to one at its midpoint to preserve the level of the data it is shaping. The function  is an averaging window. A good choice for

is an averaging window. A good choice for  is simply a rectangle with width

is simply a rectangle with width  and height

and height  .

.

I usually use a Chebyshev data-tapering window , but I can swap that out for MATLAB’s hamming.m or hanning.m or others and still get good results. All those windows have value of one at their midpoint and gracefully decay from there toward zero on either side, but they don’t have unit energy. If you do

, but I can swap that out for MATLAB’s hamming.m or hanning.m or others and still get good results. All those windows have value of one at their midpoint and gracefully decay from there toward zero on either side, but they don’t have unit energy. If you do

x = hamming(64);

y = sum(x .^ 2);

z = sum(x);

you get y = 25.04 and z = 34.1.

The analytical approach to understanding the effects of and

and  is through representing all spectral correlation estimators as general quadratic transformations of the input data, which involves a two-dimensional kernel function. That function usually can be approximated as the product of functions relating to

is through representing all spectral correlation estimators as general quadratic transformations of the input data, which involves a two-dimensional kernel function. That function usually can be approximated as the product of functions relating to  and

and  . See The Literature [R1].

. See The Literature [R1].

I need to revisit all this carefully and attempt to explain it to us all!

Thanks so much, Chad!

The SCF levels output from my SSCA now seem to be independent of N and Np and the window functions, as they should be, and they match yours for the BPSK signal. I still have a normalization factor of 1/N that doesn’t match April’s report, but I’m assuming that isn’t a big deal – maybe a difference in the normalization of our FFT algorithms or an oversight or typo in his report, or I just can’t read again.

I’d love to see [R1] from your Literature page, but the asking price is around $3,000 online at the moment. I’ll see if I can get a copy through my library or something. 😉

Are you using an averaging window that has values of one or values of

that has values of one or values of  ? I use the latter, which implements a straightforward arithmetic mean (average value), but I do it by ignoring

? I use the latter, which implements a straightforward arithmetic mean (average value), but I do it by ignoring  in the main algorithm, and then after the strip FFT, I just scale by

in the main algorithm, and then after the strip FFT, I just scale by  . I do agree that the averaging window should have unit area. So maybe that’s your remaining factor.

. I do agree that the averaging window should have unit area. So maybe that’s your remaining factor.

I just verified that on amazon.com! Whoa! I mean, it is a good book, but not worth anywhere near that price. I wonder if people are actually paying that kind of money for the book. Perhaps I should sell mine!

So it turns out you were right – I was _effectively_ using a with values of

with values of  . I was creating the correct

. I was creating the correct  , but it seems that the section of April’s report that I was using omitted the step where it was applied, and thus I neglected to apply it as well. That is, his equation (31) should also include a multiplication by the matrix

, but it seems that the section of April’s report that I was using omitted the step where it was applied, and thus I neglected to apply it as well. That is, his equation (31) should also include a multiplication by the matrix  .

.

Now I’m working on getting the conjugate version working – it doesn’t seem to be addressed directly in the references I’ve looked at so far, so now I’m trying to actually understand the equations I’ve implemented a little better to catch what the differences are…

Still working out a couple other issues with my SSCA for the conjugate SCF, but am I correct that the only difference is versus

versus  in equation (1)? I had been thinking that I would have to reverse some indices or something as in the conjugate cyclic periodogram, but that doesn’t seem to be the case with what I’ve been able to go through on the math thus far.

in equation (1)? I had been thinking that I would have to reverse some indices or something as in the conjugate cyclic periodogram, but that doesn’t seem to be the case with what I’ve been able to go through on the math thus far.

Of course, five minutes after I post my last comment, I discover a typo in my code, and now my conjugate SCF matches yours for the BPSK signal.

Glad to hear it!

Is the cycle frequency the same as the symbol rate or chip rate?

Thanks for the comment Bo!

For many digital signals, the symbol rate is in fact a non-conjugate cycle frequency. For direct-sequence spread-spectrum signals, the chip rate is typically a cycle frequency. But there can be many more cycle frequencies so, no, the cycle frequency set is not identical to the symbol rate. For many other signals, the dominant cycle frequencies can be related to other aspects of the modulation (for example, OFDM). In the post above, you’ll see an example of the blindly detected cycle frequencies for rectangular-pulse BPSK. That signal has many cycle frequencies, including harmonics of the bit rate.

Cyclostationarity of digital QAM and PSK

Cyclostationarity of DSSS

Gallery of spectral correlation

Gallery of cyclic correlation

Thanks!

I’m confused on the coherence part from the block diagram. Typically I think of coherence as the cross spectral density divided by the product of the auto spectral densities of the two inputs. In this case we’ve already taken the FFT. What am I missing?

Also which pairs are used for coherence calculation? All the channelized inputs go into the block diagram.

The spectral coherence function in CSP is indeed a cross spectral density divided by the (square root of the) product of the involved auto spectral densities. It is just that the cross spectral density involves two signals derived from one waveform. Have you looked at the spectral coherence post? You might appreciate The Literature [R150].

When applying a blind cycle-frequency estimator like the SSCA, I typically compute the coherence for each (f, alpha) pair for which the algorithm produces an estimate of the spectral correlation function. For N input samples and N’ strips or channels, that’s NN’ spectral correlation point estimates for the non-conjugate SCF and another NN’ for the conjugate. But if you have some cycle frequencies in mind, you can skip the SSCA (or FFT accumulation method), and just use the TSM or FSM to estimate the SCF, then use a side PSD estimate to compute the coherence for the known CF and various spectral frequencies f.

Hey Chad,

I’ve been working through implementing the SSCA using your blog and E. April’s report with good results. I’ve quickly come to respect the rather large computational costs. Another SCF estimate algorithm I’ve come across and implemented called the “Fast-SC” algorithm by J. Antoni looks to have some improved computational complexity and some other positive attributes in certain use cases. It’s published here: https://www.researchgate.net/publication/313389754_Fast_computation_of_the_spectral_correlation). After searching your blog and the literature page I didn’t see any references. Have you ever reviewed this estimation algorithm before? I would be curious to know your thoughts on it. The author also has some nicely documented MATLAB code to go along with the paper (https://www.mathworks.com/matlabcentral/fileexchange/60561-fast_sc-x-nw-alpha_max-fs-opt) which is rare!

Brad: Thanks much for stopping by the CSP Blog!

Yes, others have sent me that paper by Antoni. I have a post in the works. (Update December 2021: The post is published.)

For now, I’ll just say my preliminary conclusion is that the Antoni estimator can be faster than “FSM in a loop” or “TSM in a loop” for small ranges of cycle frequency. But it is still slower than a properly implemented SSCA, even without parallelization. That is, for the example in the code you linked to, Antoni looks at a range of cycle frequencies equal to![[0, 0.04]](https://s0.wp.com/latex.php?latex=%5B0%2C+0.04%5D&bg=ffffff&fg=1a1a1a&s=0&c=20201002) in normalized Hz, and when I run it (as is, using his signal but extended to

in normalized Hz, and when I run it (as is, using his signal but extended to  samples), that takes about 0.75 seconds (not including any of the plotting, just the function call). When I run my best C-based SSCA, I get the entire SCF and the entire coherence function, as well as coherence-based thresholding and cycle-frequency binning, reducing the entire calculation to the two (correct) detected cycle frequencies, it takes 0.4 seconds. A good MATLAB SSCA implementation I have does the same calculation, but it takes 1.6 seconds. So the C-based SSCA is faster, and it does a lot more than Antoni’s calculation, and the MATLAB-based SSCA is arguably faster in that it also does a lot more than look at that narrow set of cycle frequencies. When I put the FSM in a loop over Antoni’s restricted range of cycle frequencies, it takes 4.7 seconds, which includes the SCF estimate, the coherence estimate, and the maximization of the coherence magnitude over frequency.

samples), that takes about 0.75 seconds (not including any of the plotting, just the function call). When I run my best C-based SSCA, I get the entire SCF and the entire coherence function, as well as coherence-based thresholding and cycle-frequency binning, reducing the entire calculation to the two (correct) detected cycle frequencies, it takes 0.4 seconds. A good MATLAB SSCA implementation I have does the same calculation, but it takes 1.6 seconds. So the C-based SSCA is faster, and it does a lot more than Antoni’s calculation, and the MATLAB-based SSCA is arguably faster in that it also does a lot more than look at that narrow set of cycle frequencies. When I put the FSM in a loop over Antoni’s restricted range of cycle frequencies, it takes 4.7 seconds, which includes the SCF estimate, the coherence estimate, and the maximization of the coherence magnitude over frequency.

So from those results, it looks like you can use Antoni’s method when you want to do FSM-in-a-loop style estimation for a small range of cycle frequencies, and you’ll get a benefit.

But if you increase the range of cycle frequencies in the Antoni code a bit, from![[0, 0.04]](https://s0.wp.com/latex.php?latex=%5B0%2C+0.04%5D&bg=ffffff&fg=1a1a1a&s=0&c=20201002) to

to ![[0, 0.2]](https://s0.wp.com/latex.php?latex=%5B0%2C+0.2%5D&bg=ffffff&fg=1a1a1a&s=0&c=20201002) , the code takes 27.5 seconds, whereas my FSM-in-a-loop takes 21.8 seconds. Antoni suggests modifying the Nw parameter. So I guess you have to choose the right Nw parameter for each combination of data length and cycle-frequency range. The SSCA execution times are unchanged, of course.

, the code takes 27.5 seconds, whereas my FSM-in-a-loop takes 21.8 seconds. Antoni suggests modifying the Nw parameter. So I guess you have to choose the right Nw parameter for each combination of data length and cycle-frequency range. The SSCA execution times are unchanged, of course.

I will have some comments on the paper too, and I’m not sure what exactly is being computed in the code. For example, the maximum allowable cycle frequency is rather than

rather than  . This can happen when one does direct computation of the cyclic autocorrelation, for example, because the sine wave that you need to multiply the lag product by cannot be unambiguously represented for

. This can happen when one does direct computation of the cyclic autocorrelation, for example, because the sine wave that you need to multiply the lag product by cannot be unambiguously represented for  , but frequency-domain methods (and I thought Antoni’s was a frequency-domain method) don’t suffer from this problem. The nominal range of cycle frequency is

, but frequency-domain methods (and I thought Antoni’s was a frequency-domain method) don’t suffer from this problem. The nominal range of cycle frequency is ![[-f_s, f_s]](https://s0.wp.com/latex.php?latex=%5B-f_s%2C+f_s%5D&bg=ffffff&fg=1a1a1a&s=0&c=20201002) and the range for spectral frequency is

and the range for spectral frequency is ![[-f_s/2, f_s/2]](https://s0.wp.com/latex.php?latex=%5B-f_s%2F2%2C+f_s%2F2%5D&bg=ffffff&fg=1a1a1a&s=0&c=20201002) . The input data is real-valued, and the input cycle frequency range cannot contain negative cycle frequencies, which are both big problems for normal complex-envelope processing, in which the conjugate cycle frequencies are often negative (e.g., if the carrier frequency offset is negative, the doubled-carrier conjugate cycle frequency will be negative).

. The input data is real-valued, and the input cycle frequency range cannot contain negative cycle frequencies, which are both big problems for normal complex-envelope processing, in which the conjugate cycle frequencies are often negative (e.g., if the carrier frequency offset is negative, the doubled-carrier conjugate cycle frequency will be negative).

Good evening Chad. Did you complete the post on Antoni’s work you allude to in this comment?

Thanks,

John

Yes, I published a detailed post on it in October.

Hi Dr. Spooner,

I am just starting to learn about this subject matter before I attempt to write some code for probably either the FAM or SSCA method. My initial question is a high level one and may seem pretty basic (and will likely reveal my ignorance).

In my application the signal to be processed is fed from a single channel of an already existing polyphase channelizer. The selected channel output signal is thus already bandpass filtered (and as well is complex and basebanded). Given that, when looking at Fig. 6 of the Roberts-Brown-Loomis paper, or Fig. 1 of you SSCA blog, I see the system input being passed through bandpass filters. I don’t think I need those. Yes? No?

Thanks,

Brian

Welcome to the CSP Blog Brian! And to the field.

Yes, you do need those bandpass filters in the SSCA, which I denote in Figure 1 by the generic term “channelizer,” which as you know is just a bank of filters.

The provenance of your data to be processed doesn’t matter to the SSCA or FAM algorithms. The front-end channelizer in the SSCA determines the spectral resolution of the spectral correlation measurement, which is not dependent on the way that the input data is obtained. In other words, when we do spectral correlation analysis on some sampled data, the various ways that the original RF energy is processed (frequency conversions, filtering, level shifting, etc.) to eventually obtain a sequence of complex values corresponding to a sampling rate of Hz does not matter.

Hz does not matter.

Perhaps your channelizer produces channels with width 1 MHz, perhaps only 10 kHz, I don’t know. But the bottom line is that you apparently wish to process the complex samples corresponding to one of those channels. Suppose the width is 1 MHz. Then maybe the channel contains some signals with width 200 kHz, or maybe the channels contain only portions of a 5-MHz-wide signal. The starting point for spectral correlation analysis is the same: apply a bank of bandpass filters to the incoming data.

The width of those front-end channelizer filters in the SSCA determines the spectral resolution of the measurement, which is intimately related to the quality of the measurement through the resolution product.

So your choice of channelizer parameters in your data-acquisition system is driven by your application, and the choice of the channelizer parameters in the SSCA is driven by your computational complexity constraints and your desired estimator-output quality. The number of channels in the channelizer and the length of the processed data block determine the computational cost.

I recommend reading up on the resolution product and the three resolutions in CSP.

Have I answered your question or did I misinterpret?

No misinterpretation. FYI, my polyphase channelizer has a 2x OS architecture with a signal BW per channel 0f 1.8 MHz (fsamp = 3.6 MHz).

Your answer is understood, thanks much.

I am now getting good results for BPSK signals. Can easily see carrier frequency and chip duration at distinct alpha values. Results not so good for QPSK or QAM. Is it possible to upload plots?

Brian’s plot added by The Management:

Do you mean “doubled carrier frequency” instead of “carrier frequency”?

The reason you cannot get an estimate of the doubled carrier frequency with accuracy on the order of the cycle-frequency resolution (reciprocal of the processed-block length) is that the QPSK and 4QAM signals do not possess conjugate cyclostationarity. That is, their second-order cumulants for or

or  are identically zero. Equivalently, the conjugate spectral correlation function is identically zero (the surface of the theoretical function is zero-valued). So you see the nice spikes at twice the carrier (8.2 MHz) for BPSK, but just kind of a blob for QPSK and 4QAM. Another way of saying this is that for QPSK and 4QAM (and all the other PSK and QAM signals with constellations that have more than two points and a two-fold symmetry in the real/imag plane) there are no second-order cycle frequencies relating to the carrier.

are identically zero. Equivalently, the conjugate spectral correlation function is identically zero (the surface of the theoretical function is zero-valued). So you see the nice spikes at twice the carrier (8.2 MHz) for BPSK, but just kind of a blob for QPSK and 4QAM. Another way of saying this is that for QPSK and 4QAM (and all the other PSK and QAM signals with constellations that have more than two points and a two-fold symmetry in the real/imag plane) there are no second-order cycle frequencies relating to the carrier.

However, many of these signals have non-zero cyclic cumulants for , and since the general formula for their cycle frequencies is

, and since the general formula for their cycle frequencies is  , you can get an estimate of

, you can get an estimate of  with accuracy about equal to the cycle-frequency resolution. But then you have to do quartic nonlinear processing to the signal instead of just quadratic, like for BPSK, so there is a noise penalty.

with accuracy about equal to the cycle-frequency resolution. But then you have to do quartic nonlinear processing to the signal instead of just quadratic, like for BPSK, so there is a noise penalty.

Simple suggestion: Try squaring your signal first, then apply what you’re already doing. You’ll need to watch out for aliasing if the carrier frequency offset is a significant fraction of the sampling rate.

Before I go on to study higher order cyclo, I should mention that indeed squaring the signal enables SCCA to produce nice spectral lines at 4fc for QPSK and MQAM (at least up to a 64 point square constellation).

Interestingly 8PSK did not work so well, but when I square the signal yet again, I get a nice spectral line at 8fc.

Thanks Dr. S.

You’re quite welcome!

Yes, you need at least an eighth-order nonlinearity to force each 8PSK constellation point to map to a single constellation point, which then leads to a constant component in the complex envelope (perfectly downconverted) signal, or to an additive sine-wave component with frequency in an imperfectly downconverted signal (here

in an imperfectly downconverted signal (here  is the carrier frequency offset). This works but really suffers when there is significant noise present in the signal band.

is the carrier frequency offset). This works but really suffers when there is significant noise present in the signal band.

You can predict the minimum required order of nonlinearity by looking at the tables of moments and cumulants in the PSK/QAM post.

Hi Chad.

I think I’m missing something. You state above, “The demodulates are formed by short-duration (N’) FFTs,” where N’ is also the number of channels. Similarly, R4 in The Literature states, “a(r) is a data tapering window of length T=N’Ts seconds. For convenience, the quantity $\delta a$ is defined to be the bandwidth of the input filters; hence for this system the bandwidth of the input filters is also the frequency resolution of the estimate…”

It seems like N’ defines two things: the frequency resolution of each channelizer FFT, and the number of channels). I don’t understand why (or if) they need to be the same.

The kids are back in school, hence I am sleep deprived. Please forgive me if I’m missing something obvious.

So the way I look at it is that we define the number of channels–the number of data samples we Fourier transform in the sliding-FFT function at the beginning of the SSCA algorithm–and the tapering window , and the frequency resolution follows from those choices and the basic properties of the Fourier transform.

, and the frequency resolution follows from those choices and the basic properties of the Fourier transform.

Agree?

“Agree?” I think so. I’ll try to summarize my understanding, then ask a pair of related questions.

The axis of the

axis of the  plane is divided into N’ points by our SSCA strips (channels). That’s the simplest way for me to visualize the relationship between N’ and frequency resolution, although I understand the shrinking region of SCF support for other values of

plane is divided into N’ points by our SSCA strips (channels). That’s the simplest way for me to visualize the relationship between N’ and frequency resolution, although I understand the shrinking region of SCF support for other values of  . Additionally, R4 presents the concept of a diamond-shaped Cyclic Spectrum Analyzer (CSA) cell. The CSA cell covers the continuous area in the

. Additionally, R4 presents the concept of a diamond-shaped Cyclic Spectrum Analyzer (CSA) cell. The CSA cell covers the continuous area in the  plane that contributes to a single point estimate. The axis of the CSA diamond aligned with the frequency axis has a width (in normalized units) of 1/N*, where N* is the number of samples passed by

plane that contributes to a single point estimate. The axis of the CSA diamond aligned with the frequency axis has a width (in normalized units) of 1/N*, where N* is the number of samples passed by  . So the SSCA frequency resolution is still set by N’, but you might as well match the width of the CSA cell (1/N*) with the frequency spanned by the SSCA strip (1/N’). Hence the statement quoted above from R4, beginning with “For convenience…” You also need to design

. So the SSCA frequency resolution is still set by N’, but you might as well match the width of the CSA cell (1/N*) with the frequency spanned by the SSCA strip (1/N’). Hence the statement quoted above from R4, beginning with “For convenience…” You also need to design  to minimize its transfer function’s sidelobes to avoid cycle leakage, etc., etc.

to minimize its transfer function’s sidelobes to avoid cycle leakage, etc., etc.

Q1: When you say “sliding” FFT, do you mean there is overlap in the time domain samples (windowed by ) used to generate successive FFT outputs? The SCF implementation I took off our shelf does that.

) used to generate successive FFT outputs? The SCF implementation I took off our shelf does that.

Q2: R4 seemed to clearly indicate (though I don’t have the passage handy to quote) the channelizer is outputting frequency domain samples that are then point-by-point multiplied with the time domain data that generated them. Heaven forbid I doubt Bill Brown, but that feels so counterintuitive that I can’t help baulking. Am I understanding R4 correctly?

PS: I’m rusty on LaTeX, let alone its use in WordPress. If my response looks like garbage, I’d appreciate a little clean up by The Management.

Thanks for using latex! You don’t need the ” ” around the latex format strings. Also, make sure there isn’t a space between the first dollar sign and the keyword latex. I fixed the latex format strings in the comment.

Yes. The parameter is typically called in the SSCA descriptions. It sets the amount of overlap between successive

in the SSCA descriptions. It sets the amount of overlap between successive  data subblocks that are to be tapered and DFT’d. I typically use

data subblocks that are to be tapered and DFT’d. I typically use  , meaning that we slide over by a single sample to obtain the next subblock. The motivation for larger

, meaning that we slide over by a single sample to obtain the next subblock. The motivation for larger  , which means less overlap than for

, which means less overlap than for  , is to save memory. Larger

, is to save memory. Larger  means you’re effectively subsampling the channelizer outputs. But memory isn’t a big deal, and you have to ensure that the channel sequences are back up to the input-data rate before multiplying them by the input data anyway, so

means you’re effectively subsampling the channelizer outputs. But memory isn’t a big deal, and you have to ensure that the channel sequences are back up to the input-data rate before multiplying them by the input data anyway, so  avoids the required interpolation step when

avoids the required interpolation step when  .

.

This is an important concept to bring up. Clearly we say that the FFT produces a set of ‘frequency-domain points’ for an

‘frequency-domain points’ for an  -sample time-domain input. But here we are applying the FFT to a sequence of data blocks, so we obtain a sequence of ‘frequency-domain vectors.’ Just focus on one of the FFT bins. We can associate the sequence of FFT values for that bin with a time index–the start time of the block (or the middle time, or end time, or whatever). So now we have a time-domain signal, produced by a sequence of frequency-domain operations (the FFTs). That is, the action of the channelizer step in the SSCA is to produce

-sample time-domain input. But here we are applying the FFT to a sequence of data blocks, so we obtain a sequence of ‘frequency-domain vectors.’ Just focus on one of the FFT bins. We can associate the sequence of FFT values for that bin with a time index–the start time of the block (or the middle time, or end time, or whatever). So now we have a time-domain signal, produced by a sequence of frequency-domain operations (the FFTs). That is, the action of the channelizer step in the SSCA is to produce  individual temporal sequences. If

individual temporal sequences. If  , then these temporal sequences are sampled at the same rate as the input signal. So we can interpret the multiplication of one of the channelizer output sequences by the input data as the multiplication of two time-domain signals.

, then these temporal sequences are sampled at the same rate as the input signal. So we can interpret the multiplication of one of the channelizer output sequences by the input data as the multiplication of two time-domain signals.

It is like how we might speak about the output of a bandpass filter. On the one hand, we can interpret the BPF as multiplying the Fourier transform of the input by the Fourier transform of the impulse-response function and then inverse transforming–lots of frequency-domain ideas. On the other hand, the output of the BPF is also exactly equal to the convolution of the impulse-response function with the time-domain signal–zero frequency-domain ideas.

The channelizer here is just a (relatively crappy) bank of bandpass filters.

You buying what I’m selling here?

Hold the phone. Am I understanding correctly that each channel out of the channelizer is just one of the FFT bins? I.e., is the procedure: samples using the window defined by

samples using the window defined by  ;

; );

); and repeat steps 1-3 until

and repeat steps 1-3 until  samples have been generated for the longer strip FFTs?

samples have been generated for the longer strip FFTs?

1. Select

2. Take the FFT of those samples;

3. Multiply each bin by the reference sample (e.g. sample

4. Increment by 1 the samples windowed by

If the above is correct, is it the same approach analyzed by R4? In particular, I’d like to verify their estimates of computational complexity are applicable.

Yes, I think your 4-step description is consistent with the SSCA point-estimate formula.

However, let me try to clarify, although perhaps I will be perceived as pedantic.

The samples you transform with the -point channelizer FFT are not defined by the taper

-point channelizer FFT are not defined by the taper  . They are merely defined by sliding along with time (sample index) and picking up the next

. They are merely defined by sliding along with time (sample index) and picking up the next  points. You then apply the taper to those points.

points. You then apply the taper to those points.

I believe this is correct. However, it is typically more efficient and perhaps easier to code if you do all of the channelizer FFTs first. Then you take each channelizer sequence (corresponding to one of the FFT bin indices over time [samples]) and multiply by the original input data. Then take the

FFT bin indices over time [samples]) and multiply by the original input data. Then take the  -point FFT.

-point FFT.

Yes. You can see my complexity analysis here.

This has been so hard for me to wrap my head around.

The conclusion I’ve come to is that takes in a time-domain signal and outputs a time-domain signal, just as if it was using a non-FFT-based channelizer (mixer+lpf), but with greater computational efficiency.

takes in a time-domain signal and outputs a time-domain signal, just as if it was using a non-FFT-based channelizer (mixer+lpf), but with greater computational efficiency.

This is accomplished by not summing for each , like we would for in a traditional FFT use-case.

, like we would for in a traditional FFT use-case.

I think the notation to describe this process is where the confusion boiled down to.

to describe this process is where the confusion boiled down to.

I finally understand it now, though.

Once again. Thank you.

What do you mean by ‘not summing for each f?’

Eq (2) is:

If you fix and

and  , you just have the finite-time Fourier transform of the signal

, you just have the finite-time Fourier transform of the signal

. If you consider a collection of

. If you consider a collection of  , with fixed

, with fixed  , you’ve got a time-series

, you’ve got a time-series for

for that are in the collection.

that are in the collection.

(signal) that corresponds to the complex-valued Fourier transform at frequency

the instants of time

If you consider a collection of and also a collection of

and also a collection of  , then you

, then you corresponding to your

corresponding to your .

.

have a vector of Fourier transform values for each

collection of frequencies

If you let the collection of frequencies lie on a regular grid and their

lie on a regular grid and their , then you can perform the calculation for each

, then you can perform the calculation for each  using

using

number is

the fast Fourier transform.

When slides along in time, and we use

slides along in time, and we use  frequencies

frequencies  , then

, then  represents the output of an FFT filterbank. See also My Papers [31].

represents the output of an FFT filterbank. See also My Papers [31].

Excellent. I got 90 minutes of sleep last night, so spelling things out is much appreciated.

I got the SSCA working and figured I should qualify the outline I made above by mentioning a step I initially overlooked. Equation 2 in R4, for calculating the demodulates, differs from the definition of the FFT (e.g. in scipy, or Matlab) in the relationship between the limits of integration and the time shift of the input and the FFT’s complex exponential. Massaging the math in Eq. 2 made the necessary reference sample and other tweaks apparent.

Glad to hear you’ve got the SSCA working, and thanks for closing the loop with this comment John.

The discrepancy between the limits of the sum in typical software-package FFT calls and the limits in the continuous-time sliding FFTs in CSP is a continual pain. I’ve pointed it out for the TSM and FAM, and of course it applies here too. Really appreciate your confirmation and comment, which helps CSP Blog readers for sure.

Hello dr.Spooner,

nice post. I just want to ask if I properly understand how to set detla N to achieve good resolution in frequency so if I set N from 8 to 128 with sampling frequency 2k. That means I have resolution in time from 0.0004 to 0.064 or in frequency from 15.6 to 2500 ? I think I understand how to set delta alpha ( if I want to achieve resolution in Baud rate I set alpha from 0.005 to 0.5 with 0.005 step when I apply Fs.*alpha in final CAF figure). I have also code in Matlab. Thank you for your response. Have a nice day.

My code:

clc;

clear all

close all

%%%%%%%%%%%%

M = 2;

Fc = 200;

Fs = Fc*10;

n_bit = 2000;

Fb = 50;

Td = n_bit/Fb; % Time duration, sec

spb = Fs/Fb;

fSpan = 2;

N_psd = 1024;

%%%%%%%%%%%%

data = randi([0 M-1],n_bit,1);

dmodData = (pskmod(data,M));

dmodData = awgn(dmodData,3,’measured’);

txFilter = comm.RaisedCosineTransmitFilter(“Shape”,”Square root”,”RolloffFactor”,0.3,”FilterSpanInSymbols”,fSpan,”OutputSamplesPerSymbol”,spb);

txfilterOut = txFilter(dmodData)’;

time = 0:1/Fs:Td-1/Fs; %

freq = 0:1/(Td-1/Fs):Fs; %

% figure(420)

% plot(time,txfilterOut)

carrier = exp(1j*2*pi*Fc.*time); %

txfilterOut = txfilterOut.*carrier; %

% figure(1)

% plot(time,real(txfilterOut),’r’)

% hold on

% plot(time,imag(txfilterOut),’b’)

% title(‘time domain’)

spek = (abs(fft(txfilterOut)));

% figure(4)

% plot(freq,spek)

% title(‘freq. domain’)

num_blocks = floor(length(txfilterOut) / N_psd);

S = txfilterOut(1, 1:(N_psd*num_blocks));

S = reshape(S, N_psd, num_blocks);

I = fft(S);

I = I .* conj(I);

I = sum(I.’);

I = fftshift(I);

I = I /(num_blocks * N_psd);

freq_c = linspace(-Fs/2,Fs/2,N_psd); %[0:(N_psd-1)]/N_psd – Fs/2;

% figure(666)

% plot(freq_c,10*log10(I))

% title(‘PSD’)

carrier = exp(-1j*2*pi*Fc.*time); %

txfilterOut = txfilterOut.*carrier; %

spek = (abs(fft(txfilterOut)));

% figure(3)

% plot(freq,spek)

% title(‘demo’)

%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%

alpha = 0.005:0.005:0.5; %Resolution in Baud rate 10 (from 10 to Fs/2) %%%%%%%%%%%%%%%%%%%%%%%%

deltN = 128:32:256; %Resolution in Baud rate 10 (from 10 to Fs/2)

CAFko = zeros(1,length(alpha));

for i = 1:length(alpha)

for j = 1:length(deltN)

num_blocks = floor(length(txfilterOut) / deltN(j));

txfilterOutte = txfilterOut(1, 1:(deltN(j)*num_blocks));

modulplu = txfilterOutte.*exp(1j*2*pi*(Fc+alpha(1,i))/2.*[1:(deltN(j)*num_blocks)]); %

modulminu = txfilterOutte.*exp(1j*2*pi*(Fc-alpha(1,i))/2.*[1:(deltN(j)*num_blocks)]); %

modulplu = reshape(modulplu, deltN(j), num_blocks);

modulminu= reshape(modulminu, deltN(j), num_blocks);

x1 = fft(modulplu);

x2 = fft(modulminu);

I = x1 .* conj(x2);

Isum = sum(I.’);

Isum = fftshift(Isum);

Iabsft = Isum /(num_blocks * deltN(j));

CAFko(1,i) = CAFko(1,i) + sum(abs(fftshift(ifft(Iabsft))));

end

end

alpha = Fs.*alpha;

figure(2)

stem(alpha,CAFko)

title(‘SSCA CAF’)

I’m not sure what you are trying to do here. Are you trying to implement the SSCA?

Also I don’t recall using ‘delta N’ in the post (your ‘detla N’). You don’t want to use such small values for N, the total number of processed samples, in any CSP algorithm.

To implement the SSCA, you need to channelize your data (apply a sliding FFT with length N’ and retain the resulting N’ complex-valued signals each with length N), then multiply each of those channels by the input signal data, and finally Fourier transform (N points) each of those N’ vectors.

Sorry I didnt understand how deep the problem is. So please I have a few questions which came in way how to perform mentioned channelizer. The steps to obtain channelized data if I understanded right is:

1. get N’ points from x(t) so N is x(0) to x(N-1)

2. repeat the process with incremented position in x(t) by 1

3. get signal with length N from steps 1 and 2

4. multiply signal from 3rd step with input signal x(t)

5. fft the result from 5th step

This is how I understanded it from your comment.

When I search for some info in web Im searching for fft filter bank but I dont see any connection with sliding FFT. When I search for sliding FFT I get info about sliding DFT and the Goertzel algorithm. Sorry for poor knowledge in channelizer problematics but Im trying to do my best. Thank you for your answer and patience.

The channelizer in the SSCA is the implementation of equation (2), where is discrete time and

is discrete time and  is discrete frequency. In terms of your steps above, the channelizer is steps 1-3 with the addition that each obtained block of

is discrete frequency. In terms of your steps above, the channelizer is steps 1-3 with the addition that each obtained block of  points of

points of  are Fourier transformed. Then your steps 4 and 5 go on to implement the remaining portions of the SSCA algorithm.

are Fourier transformed. Then your steps 4 and 5 go on to implement the remaining portions of the SSCA algorithm.

I found solution in commP25ssca.m which is function in Matlab. Now I think I can implement channelizer but after that they use in SSCA step which is called replication and multiplication. After that they just compute SCF. I saw your post on this function and Im trying to understand it. I set L = 1, but I have stuggle with delta_alpha and delta_f. In your post you set the parameters delta_alpha = 1/65536 and delta_f = 0.0625 with sampling frequency 1. I have no clue how to properly set those parameters with sampling frequency not equal to one like in your example.

btw: sorry for posting code like this but is there any other way how to show you my progress?

My code:

clc;

clear all

close all

%%%%%%%%%%%%

M = 2;

Fc = 200;

Fs = Fc*10;

n_bit = 1000;

Fb = 200;

Td = n_bit/Fb; % Time duration, sec

spb = Fs/Fb;

fSpan = 2;

N_psd = 1024;

%%%%%%%%%%%%

data = randi([0 M-1],n_bit,1);

dmodData = (pskmod(data,M));

txFilter = comm.RaisedCosineTransmitFilter(“Shape”,”Square root”,”RolloffFactor”,0.3,”FilterSpanInSymbols”,fSpan,”OutputSamplesPerSymbol”,spb);

txfilterOut = txFilter(dmodData)’;

time = 0:1/Fs:Td-1/Fs;

freq = 0:1/(Td-1/Fs):Fs;

%%%%%%%%%%%%

carrier = exp(1j*2*pi*Fc.*time);

xx = txfilterOut.*carrier;

fs = Fs;

dalpha = fs/65536;

df = fs/16;

N = fs/dalpha;

Np = fs/df;

disp(‘observation time T in discrete domain ‘ + string(fs/N) + ‘ seconds’)

disp(‘window time Tw in discrete domain ‘ + string((fs/Np)) + ‘ seconds’)

L = 1;

Npl = N/L;

NN = (Npl)*L+Np;

if length(xx)<NN

xx(NN) = 0;

else

xx = xx(1:NN);

end

x = zeros(Np,Npl);

for k = 0:Npl-1

x(:,k+1) = xx(k*L+1:k*L+Np);

end

a = hamming(Np);

XWin = a.*x;

XFFT = fftshift(fft(XWin));

X = [XFFT(:,Npl/2+1:Npl) XFFT(:,1:Npl/2)]; %-N/2 N/2

E = zeros(Np,Npl);

for idxl = -Np/2:Np/2-1 % length of l is Np

for k = 0:Npl-1 % length of k is P

E(idxl+Np/2+1,k+1) = exp(-1i*2*pi*k*L*idxl/Np);

end

end

XT = X.*E;

XRep = zeros(Np,Npl*L);

for k = 1:Npl

XRep(:,(k-1)*L+1:k*L) = XT(:,k)*ones(1,L);

end

% Multiplication

% Convert input vector into a matrix, the vector first becomes a row

% vector, and repeat Np times. xc is (Np,N), the same size as XR

inputRep = xx(1:Np)';

XMul = conj((XRep.*inputRep)');

% Centered N-point FFT (zero frequency in the 0 bin)

XFFT2 = fftshift(fft(XMul));

XFFT2 = [XFFT2(:,Np/2+1:Np) XFFT2(:,1:Np/2)]; % Output of Figure 5

M = abs(XFFT2);

% Convert M to Sx

Sx = zeros(Np+1,2*N+1);

for k1 = 1:N

k2 = 1:Np;

alpha = (k1-1)/N + (k2-1)/Np – 1;

f = ((k2-1)/Np – (k1-1)/N) / 2;

k = 1 + Np*(f+0.5);

n = 1 + N*(alpha+1);

for k2 = 1:Np

Sx(round(k(k2)),round(n(k2))) = M(k1,k2);

end

end

alphao = (-1:1/N:1)*fs; % cyclic frequency (alpha)

fo = (-0.5:1/Np:0.5)*fs; % spectral frequency (f)

figure(1)

surf(alphao,fo,Sx)

shading flat

xlabel('alpha');

ylabel('f');

zlabel('Sx');

You could use imgur to post plots?

The parameters that you can control in CSP algorithms are things like the number of processed samples and the length of a channelizer (SSCA) or FFT (TSM)

and the length of a channelizer (SSCA) or FFT (TSM)  . Those choices determine the cycle-frequency resolution, the temporal resolution, and the spectral resolution. They also determine the computational cost.

. Those choices determine the cycle-frequency resolution, the temporal resolution, and the spectral resolution. They also determine the computational cost.

The cycle-frequency resolution is , the spectral resolution is

, the spectral resolution is  , and the temporal resolution is

, and the temporal resolution is  . You want to always pick

. You want to always pick  and

and  so that the resolution product is large (

so that the resolution product is large ( ).

).

Moreover, you want to pick large enough that you can process a sufficient number of the involved random variables (bits or symbols, say).

large enough that you can process a sufficient number of the involved random variables (bits or symbols, say).

Before you can really get good at writing the code and, especially, using it, you have to understand the basics of the underlying estimator concepts. So look at the posts linked to in this reply and see if that helps.

Hello Dr. Spooner,

I’m trying to undertand the channelizer step of SSCA. I used your BPSK signal code without noise stored in x_of_t value. If I understood it correctly I shoud have in my channelizer XT value from eq.2 . I’ve read some articles in whitch is written about mentioned sliding FFT (https://ccrma.stanford.edu/~jos/sasp/DFT_Filter_Bank_I.html and also https://www.comm.utoronto.ca/~dimitris/ece431/slidingdft.pdf). The sliding FFT is spectial type of STFT with rectangular window (tapper) which I have already implemented in my code. The question is for to increment f in XT(n,f) from eq.2.

As I said I used your x_of_t variable so I rather dont post all your bpsk code here.

If you be so kind and please look at my part of code:

nframes = 16;

N = 4086;

ReferenceSample = fft(x_of_t(1:N));

window = rectwin(N)’; %rectwin in sliding FFT

for i=1:nframes

k = [i:i+N-1];

tapper = window.*x_of_t(k);

bin = fft(tapper);

channelizer(i,:) = bin.*ReferenceSample;

end

Thank you

I see I forgot to reply to this comment Miropele. Sorry about that. Are you still interested in an answer, or have you moved on?

I want to apologize about previous comment. I spotted numeroud elementar mistakes after posting the comment. My code shoud look like this not like in the previous comment.

My code:

nframes = 8;

N = 4096;

ReferenceSample = fft(x_of_t(1:N));

window = rectwin(N)’; %rectwin in sliding FFT

for i=1:nframes

k = [i:i+N-1];

tapper = window.*x_of_t(k);

bin(i,:) = fftshift(fft(tapper));

end

alpha = linspace(0,1/2,nframes);

for i=1:nframes

k = [i:i+N-1];

E(i,:) = conj(exp(1j*2*pi*(1-alpha(1,i))/2*k/N));

end

XT = bin.*E;

frek = linspace(-1/2,1/2,N);

alf = alpha’;

[efko,alk] = meshgrid(frek,alf);

figure(1)

surf(alk,efko,10*log10(abs(bin)))

This is the question you posed in the previous comment. I assume it is the question here, since there is no new question.

Unfortunately, I don’t understand the question due to grammar and it might be incomplete (?).

Are you trying to visualize the output of the channelizer in the SSCA?

If the data-tapering window is a rectangle, you do not need to actually do the taper multiplication. If the taper is a unit-height rectangle, you can just ignore it completely. If it is a rectangle with non-unit height, you can account for it later in the processing, by scaling.